As robots become increasingly integrated into various facets of human life, their ability to understand, respond to, and even simulate human emotions grows in importance. Whether in healthcare, customer service, or personal companionship, robots that engage emotionally can significantly improve the quality of human-robot interaction. This article explores how robots are designed to understand human emotions, how they respond to those emotions, and how they simulate emotions to create more engaging, intuitive, and meaningful interactions. By combining advanced artificial intelligence (AI) with emotional intelligence, robots can foster more natural relationships with humans, moving beyond purely mechanical assistance toward companionship that offers empathetic, emotionally supportive behavior.

Introduction: The Role of Emotions in Human-Robot Interaction (HRI)

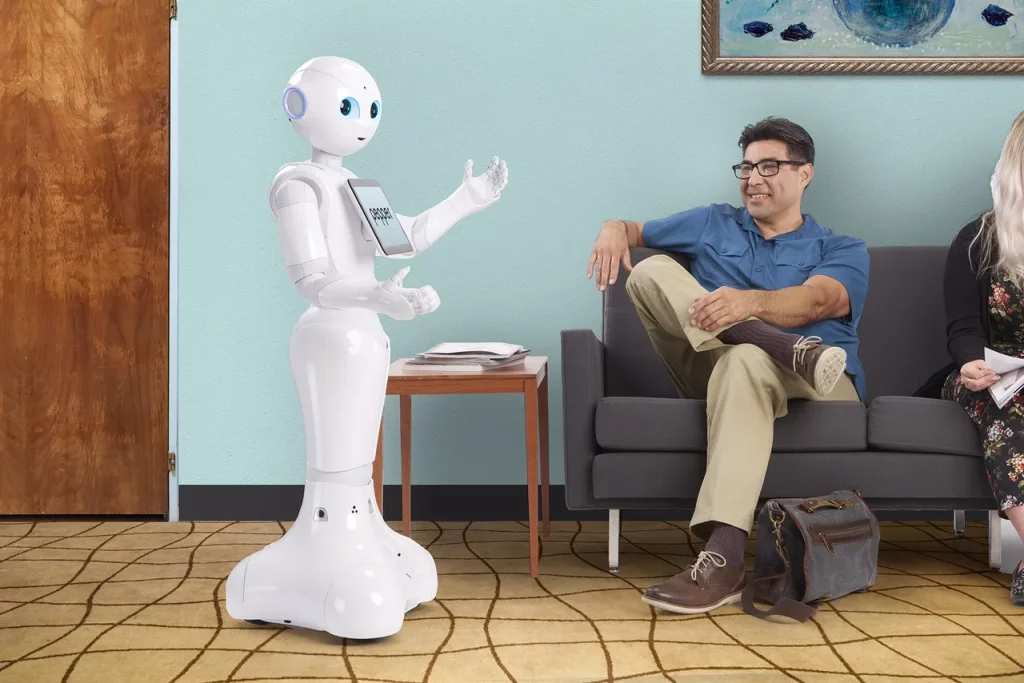

Human emotions play a central role in guiding behavior, decision-making, and communication. In human-robot interaction (HRI), a robot’s ability to recognize, understand, and respond to human emotions allows it to engage with users in ways that feel more natural, empathetic, and beneficial. The traditional view of robots as cold, logical machines is changing: robots are increasingly designed with emotional intelligence, able to interpret emotional cues from humans and adapt their behavior accordingly.

The importance of emotional intelligence in robots is particularly evident in areas where human-robot interaction goes beyond simple task completion. In healthcare, for example, robots that understand and respond to the emotional states of patients can provide better support, offer companionship, and even enhance the therapeutic process. Similarly, in customer service or education, emotionally intelligent robots can create more personalized, engaging experiences for users. As robots increasingly become part of our daily lives, their ability to simulate and respond to human emotions will be a key factor in how they are integrated into social and professional settings.

The Science of Emotional Understanding in Robots

- Emotion Recognition: The First Step to Empathy

The foundation of emotional intelligence in robots lies in their ability to recognize human emotions. Emotion recognition is the process by which robots detect emotional signals from human behaviors, such as facial expressions, voice tone, body language, and physiological responses. These signals provide crucial insights into a person’s emotional state, allowing robots to tailor their actions and responses accordingly.

Key Components of Emotion Recognition in Robots:

- Facial Expression Analysis

Facial expressions are among the most prominent ways humans express emotions. Using computer vision and machine learning algorithms, robots can analyze facial features, such as mouth curvature, eye movement, and eyebrow position, to detect emotions like happiness, sadness, anger, surprise, and fear. Advanced algorithms, especially convolutional neural networks (CNNs), enable robots to process and interpret facial data in real time, with accuracy that is highest under favorable conditions such as good lighting and a clear, frontal view of the face.

- Speech and Voice Tone Analysis

The tone of voice, pitch, cadence, and volume of speech convey important emotional information. Speech emotion recognition (SER) technology allows robots to analyze these auditory cues, enabling them to detect emotions such as joy, sadness, anger, and frustration. This method often uses machine learning models trained on large datasets of speech samples, teaching robots to associate specific vocal patterns with particular emotions.

- Body Language and Gesture Recognition

In addition to facial and vocal cues, humans express emotions through body language. Robots equipped with sensors or cameras can analyze posture, gestures, and physical movement to detect emotions. For instance, crossed arms might signal defensiveness, while a slouched posture could indicate sadness or fatigue.

- Physiological Monitoring

Some robots can also measure physiological indicators, such as heart rate, skin temperature, and skin conductance, which can offer further insight into a person’s emotional state. For example, a robot might interpret an elevated heart rate combined with increased skin conductance as a sign of stress or anxiety.
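The physiological example can be made concrete with a simple comparison against a resting baseline. This is a minimal sketch. It assumes the robot already holds a per-user resting mean and standard deviation for each signal. The names `Baseline` and `looks_stressed`, the z-score threshold of 2.0, and the rule that both signals must be elevated are illustrative assumptions, not a validated clinical method.

```python
from dataclasses import dataclass


@dataclass
class Baseline:
    """Resting statistics for one signal, assumed to be collected per user."""
    mean: float
    std: float


def z_score(value: float, baseline: Baseline) -> float:
    """Number of standard deviations a reading lies above the user's resting mean."""
    if baseline.std <= 0:
        raise ValueError("baseline std must be positive")
    return (value - baseline.mean) / baseline.std


def looks_stressed(heart_rate_bpm: float, skin_conductance_us: float,
                   hr_base: Baseline, sc_base: Baseline,
                   threshold: float = 2.0) -> bool:
    """Flag possible stress only when BOTH signals are elevated.

    Requiring agreement between heart rate and skin conductance reduces false
    alarms when one signal is noisy or raised for an unrelated reason.
    The threshold is a hypothetical tuning parameter.
    """
    return (z_score(heart_rate_bpm, hr_base) >= threshold
            and z_score(skin_conductance_us, sc_base) >= threshold)


hr_baseline = Baseline(mean=70.0, std=5.0)  # beats per minute
sc_baseline = Baseline(mean=2.0, std=0.5)   # microsiemens

print(looks_stressed(85.0, 3.5, hr_baseline, sc_baseline))  # True: both 3 std above rest
print(looks_stressed(85.0, 2.2, hr_baseline, sc_baseline))  # False: only heart rate elevated
```

Comparing each reading to the user's own baseline matters more than using a fixed cutoff, because resting heart rate and skin conductance vary widely between people.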

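By combining these methods, robots can build a more robust picture of a person’s emotional state, because one modality can compensate when another is noisy or unavailable (for example, when the person’s face is turned away from the camera). This paves the way for more empathetic and responsive interactions.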
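One common way to combine modalities is late fusion. In late fusion, each recognizer outputs a probability per emotion, and the robot takes a weighted average of those outputs, skipping any modality that produced no reading. The sketch below assumes this scheme. The modality names, weights, and emotion labels are hypothetical. Real systems may instead fuse features earlier in the pipeline or learn the weights from data.

```python
from typing import Dict, Optional

# Hypothetical reliability weights per modality; in practice these would be tuned.
WEIGHTS: Dict[str, float] = {"face": 0.4, "voice": 0.3, "body": 0.1, "physio": 0.2}


def fuse(predictions: Dict[str, Optional[Dict[str, float]]]) -> Dict[str, float]:
    """Weighted average of per-modality emotion probabilities.

    A modality mapped to None (e.g. the face is not visible) is skipped,
    and the remaining weights are renormalized so the result still sums to 1.
    """
    totals: Dict[str, float] = {}
    weight_sum = 0.0
    for modality, probs in predictions.items():
        if probs is None:
            continue
        w = WEIGHTS.get(modality, 0.0)
        weight_sum += w
        for emotion, p in probs.items():
            totals[emotion] = totals.get(emotion, 0.0) + w * p
    if weight_sum == 0.0:
        return {}
    return {emotion: score / weight_sum for emotion, score in totals.items()}


def top_emotion(fused: Dict[str, float]) -> Optional[str]:
    """Most probable emotion, or None if nothing could be fused."""
    return max(fused, key=fused.get) if fused else None


readings = {
    "face": None,  # face turned away from the camera in this frame
    "voice": {"anger": 0.6, "sadness": 0.3, "happiness": 0.1},
    "physio": {"anger": 0.5, "sadness": 0.4, "happiness": 0.1},
}
fused = fuse(readings)
print(top_emotion(fused))  # anger
```

Renormalizing over the modalities that actually reported keeps the output a valid probability distribution even when the camera loses the face.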

- Understanding Emotions: Cognitive and Affective Processing

Once a robot has recognized emotional cues, it must then process and understand the underlying emotions. This requires cognitive and affective processing systems that allow the robot to make sense of the emotional data and decide on the most appropriate response.

- Cognitive Processing

Cognitive processing involves the robot’s ability to interpret the emotional context of a situation. For example, if a robot detects that a person is angry, it must consider the context (whether the anger stems from frustration with a task, a social interaction, or a personal situation) and decide how to respond accordingly. Cognitive models such as decision trees, rule-based systems, and neural networks are often used to interpret the emotional context and make informed decisions on how to engage.

- Affective Processing

Affective processing refers to the robot’s ability to simulate emotions and integrate these emotions into its behavior. By using algorithms that mimic emotional states, robots can decide how to express empathy, excitement, sadness, or joy, based on their understanding of the user’s emotional state. Affective processing allows robots to engage in more natural interactions, where the robot responds in a manner that reflects a human-like understanding of emotions.
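The cognitive processing step for the anger example can be illustrated with a small hand-written decision tree. This is a minimal sketch under stated assumptions. The `Context` fields, the cause categories, and the strategy names are hypothetical. A deployed system might learn such a tree from data rather than hard-code it.

```python
from dataclasses import dataclass


@dataclass
class Context:
    """Hypothetical context features available when an emotion is detected."""
    emotion: str                # label from the recognition stage, e.g. "anger"
    task_failed_recently: bool  # the user's last task attempt did not succeed
    talking_to_someone: bool    # another person is part of the current conversation


def choose_strategy(ctx: Context) -> str:
    """Walk a tiny hand-written decision tree from emotion plus context to a strategy."""
    if ctx.emotion != "anger":
        return "default"
    # Anger right after a failed task most likely reflects frustration with the task.
    if ctx.task_failed_recently:
        return "offer_task_help"
    # Anger during a conversation with someone else is probably social,
    # so the robot avoids interrupting.
    if ctx.talking_to_someone:
        return "stay_unobtrusive"
    # Cause unknown (possibly a personal situation): check in gently.
    return "ask_if_ok"


print(choose_strategy(Context("anger", task_failed_recently=True, talking_to_someone=False)))
# offer_task_help
```

Each branch encodes one of the possible causes of anger. This makes the robot's reasoning easy to inspect and adjust, which is the main appeal of rule-based and tree-based models in this setting.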

- Emotional Memory and Learning: Adapting to User Needs

One of the key factors in creating emotionally intelligent robots is their ability to learn and adapt over time. Through machine learning, robots can improve their ability to recognize and respond to human emotions based on past experiences. Emotional memory allows robots to recall previous interactions and adjust their behavior based on learned patterns.

For instance, if a robot interacts with a user who often expresses frustration during certain tasks, it can remember this emotional response and adapt its approach accordingly. Over time, the robot might begin to offer assistance more proactively when it detects signs of frustration or provide comforting responses when stress levels are high. This adaptive learning helps robots become more attuned to the unique emotional needs of each individual.
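The frustration example suggests a simple form of emotional memory. For each user and task, the robot keeps a running estimate of how often that task frustrates the user, and it offers help proactively once the estimate is high. The sketch below uses an exponential moving average for that estimate. The class name, the smoothing factor `alpha`, and the 0.5 threshold are illustrative assumptions rather than a standard design.

```python
from collections import defaultdict
from typing import DefaultDict, Tuple


class EmotionalMemory:
    """Hypothetical per-user, per-task memory of observed frustration."""

    def __init__(self, alpha: float = 0.3, help_threshold: float = 0.5) -> None:
        self.alpha = alpha                    # weight of the newest observation
        self.help_threshold = help_threshold  # estimate at which help is offered proactively
        self._frustration: DefaultDict[Tuple[str, str], float] = defaultdict(float)

    def record(self, user: str, task: str, frustrated: bool) -> None:
        """Blend the latest observation (1.0 or 0.0) into an exponential moving average."""
        key = (user, task)
        observation = 1.0 if frustrated else 0.0
        self._frustration[key] = (self.alpha * observation
                                  + (1 - self.alpha) * self._frustration[key])

    def frustration(self, user: str, task: str) -> float:
        """Current estimate; 0.0 for a user/task pair never observed."""
        return self._frustration.get((user, task), 0.0)

    def should_offer_help(self, user: str, task: str) -> bool:
        return self.frustration(user, task) >= self.help_threshold


memory = EmotionalMemory()
memory.record("alice", "printer_setup", frustrated=True)
print(memory.should_offer_help("alice", "printer_setup"))  # False: estimate is 0.3
memory.record("alice", "printer_setup", frustrated=True)
print(memory.should_offer_help("alice", "printer_setup"))  # True: estimate is about 0.51
```

Because older observations decay geometrically, the estimate falls again once the user stops struggling with a task. The robot therefore stops offering help the user no longer needs.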

How Robots Respond to Human Emotions

- Emotional Feedback: Responding with Empathy

One of the most important aspects of human-robot emotional interaction is the robot’s ability to respond empathetically to the emotions of the user. Empathy involves recognizing another person’s emotional state and responding in a way that acknowledges and supports that state.

- Verbal Responses

When a robot detects a particular emotion, it can respond verbally, offering comforting or encouraging words. For example, if a person expresses sadness, the robot might say, “I’m sorry you’re feeling down. Is there anything I can do to help?” or, if the low mood seems mild, offer a light-hearted joke.

- Non-verbal Responses

In addition to verbal communication, robots can also respond non-verbally. For instance, a robot might change its body posture to appear more approachable, offer a smile, or even engage in soothing gestures, such as nodding or tilting its head. These non-verbal cues are crucial for building rapport and trust between robots and users, as they make the robot seem more human-like and empathetic.

- Adaptive Responses: Tailoring Interactions

Robots can adjust their responses based on the specific emotional context, tailoring their behavior to the user’s emotional needs. For example:

- Comforting Responses for Sadness

If a robot detects sadness, it can offer words of comfort or engage in calming activities. In a healthcare setting, a robot might play soft music or lead a gentle activity to lift the patient’s mood. In customer service, where low mood often shows up as disappointment or frustration, the robot may acknowledge the customer’s feelings and work to resolve the issue efficiently.

- Supportive Responses for Stress or Anxiety

When faced with stress or anxiety, robots can provide emotional support by suggesting relaxation techniques or offering a listening ear. In therapeutic settings, robots are sometimes used as companions to help individuals manage anxiety or other emotional challenges, offering gentle reminders or calming dialogue.

- Encouraging Responses for Positive Emotions

If the robot detects happiness, excitement, or joy, it can join in the positive emotion by responding enthusiastically, offering congratulations, or even celebrating milestones with the user. For instance, if a person successfully completes a task or reaches a goal, the robot can cheer them on, reinforcing positive emotional connections.
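The adaptive behaviors listed above amount to a lookup from the detected emotion, and optionally the setting, to a response. This is a minimal sketch, assuming a hand-written table. The emotion labels, setting names, response strings, and the 0.6 confidence cutoff are hypothetical examples drawn from the cases above. A real robot would weigh far more context before acting.

```python
from typing import Dict, Optional, Tuple

# (emotion, setting) -> response; a setting of None means "any setting".
RESPONSES: Dict[Tuple[str, Optional[str]], str] = {
    ("sadness", "healthcare"): "play soft music",
    ("sadness", None): "offer words of comfort",
    ("stress", None): "suggest a breathing exercise",
    ("anxiety", None): "start a calming dialogue",
    ("happiness", None): "congratulate the user",
}


def respond(emotion: str, setting: Optional[str] = None,
            confidence: float = 1.0, min_confidence: float = 0.6) -> str:
    """Pick a response, preferring a setting-specific entry over the generic one.

    Low-confidence detections fall back to a neutral check-in, since reacting
    strongly to a misread emotion can feel intrusive.
    """
    if confidence < min_confidence:
        return "ask how the user is feeling"
    if (emotion, setting) in RESPONSES:
        return RESPONSES[(emotion, setting)]
    return RESPONSES.get((emotion, None), "continue normally")


print(respond("sadness", "healthcare"))   # play soft music
print(respond("sadness", "retail"))       # offer words of comfort
print(respond("stress", confidence=0.3))  # ask how the user is feeling
```

Keeping the policy as data rather than code lets designers tune responses for each deployment (a hospital versus a hotel lobby) without changing the selection logic.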

Simulating Emotions: How Robots Display Emotional Responses

Beyond understanding and responding to human emotions, robots can also simulate emotions to make interactions feel more genuine. This involves replicating emotional expressions through verbal and non-verbal means, creating an emotional atmosphere that enhances the user experience.

- Simulating Emotions Through Facial Expressions

Robots can be equipped with facial actuators, screens, or other technologies to simulate human-like facial expressions. A robot might smile, frown, or raise its eyebrows to show happiness, sadness, or surprise, respectively. These facial expressions are designed to make the robot appear more engaging and relatable.

- Voice Modulation

Voice modulation is another way robots simulate emotions. By altering their pitch, tone, and rhythm, robots can mimic emotional states like excitement, warmth, sadness, or calm. This modulation creates a more engaging experience, as users perceive the robot as being emotionally responsive and invested in the interaction.

- Body Language Simulation

Robots can also simulate emotions through body movements. For example, a robot might lean forward to express interest or shift its weight to convey empathy. These movements enhance the overall emotional experience by making the robot seem more interactive and alive, rather than static or mechanical.
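The three expressive channels can be driven from a single emotion label so that face, voice, and posture stay consistent. The sketch below assumes a robot whose controller accepts normalized parameters. The parameter names, ranges, and values are hypothetical illustrations, for example higher pitch and faster speech for excitement, lower and slower for sadness. They are not settings from any particular robot platform.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class Expression:
    mouth_curve: float   # -1.0 (frown) .. 1.0 (smile)
    brow_raise: float    # 0.0 (neutral) .. 1.0 (fully raised, as in surprise)
    pitch_scale: float   # multiplier on the default speaking pitch
    speech_rate: float   # multiplier on the default speaking rate
    lean_forward: float  # 0.0 (upright) .. 1.0 (leaning toward the user)


NEUTRAL = Expression(0.0, 0.0, 1.0, 1.0, 0.0)

# Illustrative target expressions at full intensity (hypothetical values).
EXPRESSIONS = {
    "happiness": Expression(0.8, 0.2, 1.1, 1.05, 0.3),
    "excitement": Expression(0.9, 0.5, 1.2, 1.15, 0.5),
    "sadness": Expression(-0.6, 0.0, 0.9, 0.85, 0.2),
    "surprise": Expression(0.1, 1.0, 1.15, 1.0, 0.1),
    "calm": Expression(0.3, 0.0, 0.95, 0.9, 0.2),
}


def blend(a: Expression, b: Expression, t: float) -> Expression:
    """Interpolate every channel from a to b; t is clamped to [0, 1]."""
    t = max(0.0, min(1.0, t))
    return Expression(**{
        f.name: (1 - t) * getattr(a, f.name) + t * getattr(b, f.name)
        for f in fields(Expression)
    })


def expression_for(emotion: str, intensity: float) -> Expression:
    """Scale an emotion's target expression by intensity; unknown emotions stay neutral."""
    if emotion not in EXPRESSIONS:
        return NEUTRAL
    return blend(NEUTRAL, EXPRESSIONS[emotion], intensity)


print(expression_for("sadness", 0.5))
```

Blending from neutral lets a single intensity value scale every channel together. At low intensity, the robot frowns only slightly and speaks just a little lower and slower. At high intensity, every channel moves toward the full target at once. The same `blend` function can also animate smooth transitions between two emotions over successive frames.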

Applications of Emotionally Intelligent Robots

- Healthcare

In healthcare, emotionally intelligent robots can assist in patient care, provide companionship, and help manage stress and anxiety. Robots designed for elderly care, for instance, can recognize when a patient is feeling lonely or anxious and respond with comforting actions. Additionally, they can offer medication reminders, monitor vital signs, and provide cognitive training exercises.

- Customer Service

In customer service, robots that can understand and respond to customer emotions can improve satisfaction and build better relationships. For example, a robot at an airport or hotel might detect customer frustration and respond with empathy, apologizing for delays and offering helpful information to alleviate stress.

- Education

Robots in education can tailor their teaching approach based on the emotional state of students. For example, if a student expresses frustration during a lesson, the robot can offer encouragement or change the pace of the lesson to match the student’s emotional state, thereby creating a more personalized learning experience.

- Companionship and Therapy

Robots that simulate and respond to human emotions can provide companionship and support for individuals who are isolated or in need of emotional assistance. This is particularly valuable for individuals with mental health challenges or those on the autism spectrum, for whom social cues can be difficult to interpret.

Challenges and Ethical Considerations

- Authenticity vs. Simulation

A key challenge in emotional robots is distinguishing between true emotional understanding and simple simulation. Robots may be able to simulate emotions convincingly, but can they genuinely experience or understand emotions in the same way humans do? It is important to manage user expectations and ensure transparency about the capabilities and limitations of emotional robots.

- Privacy and Data Security

Emotion recognition systems often rely on sensitive personal data, such as facial expressions and voice recordings. Ensuring the privacy and security of this data is critical to avoid misuse or exploitation. Ethical guidelines and regulations must be in place to protect users’ emotional data.

- Over-reliance on Robots

While emotionally intelligent robots can provide valuable companionship, there is a risk that people may become overly dependent on them, potentially reducing human-to-human interactions. Balancing robot use with real human relationships is essential to maintain emotional health.

Conclusion

Robots capable of understanding, responding to, and simulating human emotions are reshaping human-robot interaction, enhancing the quality and depth of these engagements. Whether in healthcare, education, customer service, or companionship, robots that can recognize and respond to emotions provide more intuitive, empathetic, and meaningful interactions. By integrating emotional intelligence into robotic systems, researchers are creating robots that are not only task-efficient but also emotionally engaging, opening a new frontier in human-robot collaboration. However, challenges related to authenticity, privacy, and over-reliance must be addressed to ensure that emotionally intelligent robots are used ethically and responsibly. As these technologies continue to evolve, emotionally intelligent robots hold substantial potential to enhance human experiences.