Robotics has changed substantially over the last few decades, largely because of advances in artificial intelligence (AI), machine learning (ML), and computer vision. Central to this change is the ability of robots to “see” and interpret their environments, a capability once limited to human perception. Computer vision lets robots turn visual data into decisions and interact with the world more effectively. This article explores how computer vision enables robots to perceive their surroundings, the core principles behind it, its applications, its challenges, and the future of visual perception in robotics.

Introduction: The Significance of Computer Vision in Robotics

In traditional robotics, the interaction between a machine and its environment was relatively simple. Robots followed predefined instructions, and their actions were dictated by hard-coded commands. As applications expanded into settings that demand a nuanced understanding of dynamic scenes, such as autonomous vehicles, healthcare robots, and industrial automation, robots needed more advanced capabilities. They had to see the world around them and respond to it.

Computer vision technology, which enables machines to process and understand visual information, has emerged as the key to providing this capability. Just as human vision allows us to interpret and navigate the world, computer vision allows robots to analyze and interpret visual data, making decisions based on what they “see.” This ability is essential for robots that operate in real-world environments, where context, object recognition, depth perception, and real-time decision-making are critical.

How Computer Vision Works in Robotics

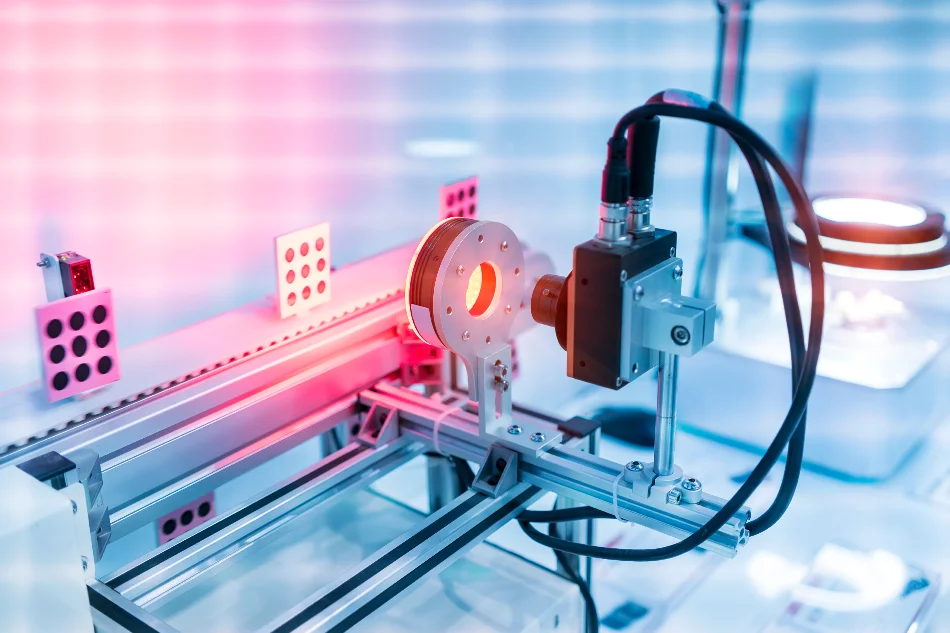

At its core, computer vision in robotics involves a combination of hardware (such as cameras and sensors) and software (algorithms and models) that allows a robot to extract meaning from visual data. Below is an outline of how computer vision works in a typical robotic system:

1. Image Acquisition

The first step in the computer vision process is image acquisition. Robots are equipped with cameras, depth sensors, or other range sensors to capture visual data about their surroundings. The type of sensor used depends on the application and the level of detail required. Some common types include:

- RGB Cameras: Standard color cameras capture images in red, green, and blue, simulating human vision.

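- Stereo Cameras: These cameras use two lenses a known distance apart to capture images from slightly different viewpoints. The shift of a point between the two images (its disparity) indicates how far away it is, enabling depth perception.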

- LiDAR Sensors: Light Detection and Ranging (LiDAR) uses lasers to measure distances and create 3D maps of the environment.

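- Infrared Cameras: Thermal infrared cameras detect heat signatures, while near-infrared cameras paired with an infrared light source can image a scene in darkness. Both are useful in low-light or otherwise challenging visual conditions.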
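To make the stereo case concrete, here is a minimal sketch of how depth follows from disparity. It assumes an idealized, rectified pinhole stereo pair, where depth is Z = f · B / d (focal length in pixels times baseline, divided by disparity in pixels). The function name and the rig parameters are illustrative assumptions, not values from any particular camera.

```python
def depth_from_disparity(focal_length_px: float, baseline_m: float,
                         disparity_px: float) -> float:
    """Depth in meters for an idealized, rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        # Zero disparity corresponds to a point at infinity; negative is invalid.
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical rig: 700 px focal length, 12 cm between the two lenses.
for disparity in (70.0, 35.0, 7.0):
    depth = depth_from_disparity(700.0, 0.12, disparity)
    print(f"disparity {disparity:5.1f} px -> depth {depth:.2f} m")
```

Because depth is inversely proportional to disparity, distant objects produce small disparities, and a one-pixel matching error matters far more at long range than at short range.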

2. Image Processing

Once the data is captured, it must be processed to enhance its quality and prepare it for analysis. Image processing includes techniques such as:

- Noise Reduction: Removing unwanted data or interference that could distort the image.

- Edge Detection: Identifying the boundaries of objects within the image to isolate them from the background.

- Image Enhancement: Adjusting contrast, brightness, and sharpness to make key features more prominent.

- Segmentation: Dividing the image into regions of interest or distinct objects, simplifying the process of recognition and analysis.
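As an illustration of edge detection, the sketch below applies the Sobel operator, one common choice among several, to a tiny grayscale image stored as a list of rows. It uses plain Python for readability; a real system would typically rely on an optimized image library. The function name and the test image are assumptions made for this example.

```python
def sobel_magnitude(image):
    """Return the Sobel gradient magnitude of a 2D grayscale image (list of rows).

    Border pixels are left at 0.0 because the 3x3 kernel does not fit there.
    """
    kx = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]   # responds to horizontal change
    ky = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]   # responds to vertical change
    height, width = len(image), len(image[0])
    out = [[0.0] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = gy = 0.0
            for j in range(3):
                for i in range(3):
                    pixel = image[y + j - 1][x + i - 1]
                    gx += kx[j][i] * pixel
                    gy += ky[j][i] * pixel
            out[y][x] = (gx * gx + gy * gy) ** 0.5
    return out


# Synthetic image: dark left half, bright right half.
image = [[0, 0, 0, 255, 255, 255] for _ in range(5)]
edges = sobel_magnitude(image)
print(edges[2])  # [0.0, 0.0, 1020.0, 1020.0, 0.0, 0.0]
```

The response is large only in the two columns that straddle the dark-to-bright boundary, which is what lets a later step isolate the object from the background.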

3. Feature Extraction

After the image is processed, the next step is feature extraction. In this phase, the algorithm identifies significant elements or features in the image that are crucial for object recognition and decision-making. These features could include:

- Edges and Corners: Edges trace the outlines of objects, while corners mark points where edges meet and are easy to locate again in later frames.

- Textures: Recognizing patterns or surface characteristics that distinguish objects.

- Shapes: Detecting specific geometric structures like circles, squares, and triangles.

- Color Patterns: Identifying colors or gradients in the image that can help classify objects.
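A color feature can be as simple as a normalized histogram. The sketch below, with hypothetical function names and an arbitrary bin count, turns a set of RGB pixels into a fixed-length feature vector and compares two such vectors by histogram intersection. It is one simple descriptor among many, shown only to illustrate what "extracting a feature" produces.

```python
def color_histogram(pixels, bins_per_channel=4):
    """Normalized RGB histogram from an iterable of (r, g, b) tuples in 0..255."""
    step = 256 // bins_per_channel
    last = bins_per_channel - 1
    hist = [0.0] * (bins_per_channel ** 3)
    count = 0
    for r, g, b in pixels:
        # Clamp so that bin counts that do not divide 256 stay in range.
        ri, gi, bi = min(r // step, last), min(g // step, last), min(b // step, last)
        hist[(ri * bins_per_channel + gi) * bins_per_channel + bi] += 1.0
        count += 1
    if count == 0:
        raise ValueError("need at least one pixel")
    return [value / count for value in hist]


def histogram_intersection(hist_a, hist_b):
    """Similarity in [0, 1]; 1.0 means the two normalized histograms are identical."""
    return sum(min(a, b) for a, b in zip(hist_a, hist_b))


red_patch = color_histogram([(250, 10, 10), (200, 30, 20)])
other_red = color_histogram([(230, 20, 5)])
green_patch = color_histogram([(20, 200, 30)])
print(histogram_intersection(red_patch, other_red))    # 1.0
print(histogram_intersection(red_patch, green_patch))  # 0.0
```

The resulting vector has the same length regardless of image size, which is what makes it usable as input to a classifier.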

4. Object Recognition and Decision-Making

The final stage recognizes and classifies objects, then makes decisions based on the result. Recognition typically relies on models such as Convolutional Neural Networks (CNNs), which learn features directly from pixels, or on classifiers such as Support Vector Machines (SVMs), which operate on extracted features like those listed in the previous step. Once the objects are identified, robots can perform tasks such as:

- Object manipulation: Picking up or moving objects.

- Path planning: Determining the optimal route for the robot based on the objects detected.

- Action execution: Carrying out actions based on the understanding of the environment (e.g., avoiding obstacles or interacting with humans).

Machine learning models, especially deep learning techniques, play a significant role in improving the accuracy and flexibility of object recognition. Trained on large, varied datasets, these models can recognize many kinds of objects across different environments, which makes the robots that use them more adaptable.
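To show the classification step in miniature, the sketch below uses a nearest-centroid classifier. This is deliberately a much simpler stand-in, not a CNN or an SVM: it averages the feature vectors of each class during training and assigns a new sample to the closest average. The two features (aspect ratio and redness) and the labels are invented for illustration.

```python
import math


def train_centroids(samples):
    """samples: list of (feature_vector, label). Returns {label: mean feature vector}."""
    sums, counts = {}, {}
    for features, label in samples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}


def classify(centroids, features):
    """Return the label whose centroid is nearest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(centroids[label], features))


# Hypothetical training data: (aspect ratio, redness) per object.
training = [
    ([1.0, 0.9], "apple"), ([1.1, 0.8], "apple"),
    ([3.0, 0.1], "banana"), ([2.8, 0.2], "banana"),
]
centroids = train_centroids(training)
print(classify(centroids, [1.2, 0.7]))  # apple
print(classify(centroids, [2.5, 0.3]))  # banana
```

The returned label is what a downstream step, such as grasp selection or path planning, would act on.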

Applications of Computer Vision in Robotics

The integration of computer vision into robotics has led to remarkable improvements in performance, enabling robots to perform more complex tasks in a variety of industries. Below are some of the most impactful applications of computer vision in robotics.

1. Autonomous Vehicles

Self-driving cars rely on computer vision to navigate and make decisions on the road. These vehicles use a combination of cameras, LiDAR, radar, and other sensors to interpret their surroundings. Computer vision algorithms help the car identify pedestrians, other vehicles, road signs, and lane markings in real time. Key computer vision tasks in autonomous driving include:

- Object Detection: Identifying and tracking pedestrians, cyclists, and vehicles.

- Lane Detection: Recognizing road lanes and ensuring the vehicle stays within them.

- Traffic Signal Recognition: Detecting traffic signals and interpreting their meaning to make driving decisions.

Reliable computer vision is crucial to the safety and efficiency of autonomous vehicles. It is a prerequisite for operating without human intervention, with the aim of making transportation safer.
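Detection and tracking pipelines commonly need to decide whether two bounding boxes refer to the same object, for example when matching a detection in one frame to a detection in the next. A widely used measure for this is intersection over union (IoU). The sketch below is a generic implementation under the assumption that boxes are given as (x_min, y_min, x_max, y_max) in pixels; it is not taken from any specific vehicle stack.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap; zero when the boxes do not intersect.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    intersection = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - intersection
    return intersection / union if union > 0 else 0.0


previous_frame = (0, 0, 10, 10)
current_frame = (5, 0, 15, 10)   # same size, shifted 5 px to the right
far_away = (50, 50, 60, 60)
print(round(iou(previous_frame, current_frame), 3))  # 0.333
print(iou(previous_frame, far_away))                 # 0.0
```

A tracker would typically treat a pair as the same object only when the IoU exceeds a threshold chosen for the application.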

2. Industrial Automation

In manufacturing and industrial environments, robots equipped with computer vision systems have become indispensable tools for improving production efficiency and quality control. These robots can visually inspect products, pick and place components, and collaborate with humans in a shared workspace. Some key applications include:

- Visual Inspection: Robots can detect defects in products, such as faulty parts or incorrect assembly, improving quality control.

- Assembly Tasks: Robots can recognize and handle parts of various shapes and sizes, assisting in assembly processes where precision is required.

- Collaborative Robots (Cobots): Robots that work alongside humans can use computer vision to identify and respond to human actions, ensuring safety and efficiency.
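One simple form of visual inspection compares each product image against a reference image of a known-good part. The sketch below assumes aligned grayscale images of equal size and flags a part when too many pixels differ. The function name, both thresholds, and the sample data are hypothetical; production systems usually add alignment, lighting compensation, and learned defect models.

```python
def defect_ratio(reference, sample, pixel_tolerance=30):
    """Fraction of pixels whose intensity differs from the reference by more
    than pixel_tolerance. Both images are equally sized lists of rows."""
    if not reference or len(reference) != len(sample) or any(
            len(ref_row) != len(smp_row) for ref_row, smp_row in zip(reference, sample)):
        raise ValueError("images must be non-empty and the same size")
    total = differing = 0
    for ref_row, smp_row in zip(reference, sample):
        for ref_px, smp_px in zip(ref_row, smp_row):
            total += 1
            if abs(ref_px - smp_px) > pixel_tolerance:
                differing += 1
    return differing / total


golden = [[200] * 4 for _ in range(4)]      # known-good part, uniform surface
scratched = [row[:] for row in golden]
scratched[1][2] = 40                        # one dark pixel simulating a defect

MAX_DEFECT_RATIO = 0.02                     # assumed acceptance threshold
ratio = defect_ratio(golden, scratched)
print(ratio, "PASS" if ratio <= MAX_DEFECT_RATIO else "REJECT")  # 0.0625 REJECT
```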

3. Healthcare Robotics

In healthcare, robotic systems equipped with computer vision are revolutionizing surgeries, diagnostics, and patient care. Surgical robots use vision systems to assist surgeons in performing complex procedures with high precision. Key applications include:

- Medical Imaging: Computer vision is used to analyze medical images, such as CT scans, X-rays, and MRIs, to detect abnormalities like tumors or fractures.

- Surgical Assistance: Robotic surgical systems use computer vision to provide real-time feedback to surgeons, guiding tools and instruments with exceptional accuracy.

- Patient Monitoring: Robots can monitor patients in a hospital or home setting, tracking vital signs and detecting abnormalities through visual cues.

The integration of computer vision in healthcare robotics can improve surgical precision and also enhance patient safety and comfort.

4. Service Robots and Human-Robot Interaction

Service robots, such as those found in hotels, retail stores, or homes, use computer vision to interact with people and perform tasks. These robots can recognize faces, detect gestures, and interpret human emotions, allowing for more intuitive and responsive interactions. Key applications include:

- Face and Emotion Recognition: Robots can identify individuals and estimate their emotional state from facial cues, enabling more personalized interactions.

- Gesture Recognition: Robots can interpret human gestures and respond accordingly, making them more intuitive to interact with.

- Human-Robot Collaboration: In environments like hospitals or factories, robots can collaborate with humans, using vision systems to adapt to the human operator’s actions.

5. Agriculture and Environmental Monitoring

Robots used in agriculture rely heavily on computer vision to monitor crop health, detect pests, and optimize farming operations. These robots can assess large fields, identify specific crops, and even perform tasks like harvesting or pruning. Applications include:

- Crop Health Monitoring: Robots equipped with vision systems can identify signs of disease or pest infestation, allowing farmers to address issues early.

- Precision Farming: By analyzing visual data from crops, robots can apply pesticides, water, and nutrients precisely where needed, reducing waste and improving yields.

- Environmental Monitoring: Robots can monitor environmental conditions, such as air and water quality, using vision and sensor data to track changes and detect pollutants.
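As a small example of analyzing visual data from crops, the sketch below computes the Excess Green index (ExG = 2g − r − b on channel values normalized by their sum), a simple color index used to separate green vegetation from soil in RGB images. The threshold and the sample pixels are assumptions chosen for illustration; real values depend on the camera, the crop, and the lighting.

```python
def excess_green(r, g, b):
    """Excess Green index on normalized channels: 2g - r - b, in [-1, 2]."""
    total = r + g + b
    if total == 0:
        return 0.0  # black pixel: no color information
    return (2 * g - r - b) / total


def vegetation_fraction(pixels, threshold=0.1):
    """Share of (r, g, b) pixels whose ExG exceeds the assumed threshold."""
    pixels = list(pixels)
    if not pixels:
        raise ValueError("need at least one pixel")
    green = sum(1 for r, g, b in pixels if excess_green(r, g, b) > threshold)
    return green / len(pixels)


leaf = (40, 160, 40)
soil = (120, 90, 60)
print(excess_green(*leaf))                           # 1.0
print(excess_green(*soil))                           # 0.0
print(vegetation_fraction([leaf, leaf, soil, soil])) # 0.5
```

A drop in vegetation fraction over the same plot between surveys is the kind of signal that could prompt a closer look for disease or pests.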

Challenges in Computer Vision for Robotics

While computer vision has unlocked new possibilities for robotics, several challenges still need to be addressed for its widespread adoption and optimization. Some of these challenges include:

1. Real-Time Processing

Processing large amounts of visual data in real time is a significant challenge, especially in dynamic environments. A robot must analyze each frame and act before the scene changes, which requires efficient algorithms and capable computing hardware. GPUs (Graphics Processing Units) and edge computing, which runs the processing on or near the robot rather than in a remote data center, help by cutting both computation time and network latency.
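The real-time constraint reduces to simple arithmetic: at a given frame rate, every stage of the pipeline must finish within one frame period. The sketch below checks a pipeline against that budget. The stage names and timings are hypothetical numbers, not measurements.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available per frame, in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def fits_budget(stage_ms: dict, fps: float) -> bool:
    """True when the summed stage times fit within one frame period."""
    return sum(stage_ms.values()) <= frame_budget_ms(fps)


# Hypothetical per-frame timings for a sequential pipeline.
stages = {"capture": 4.0, "preprocess": 6.0, "inference": 18.0, "planning": 3.0}

for fps in (30, 60):
    print(f"{fps} fps: budget {frame_budget_ms(fps):.1f} ms, "
          f"pipeline {sum(stages.values()):.1f} ms, fits={fits_budget(stages, fps)}")
```

With these numbers the 31 ms pipeline fits the roughly 33.3 ms budget at 30 frames per second but not the 16.7 ms budget at 60, which is where faster hardware or a lighter model becomes necessary.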

2. Environmental Variability

Real-world environments are unpredictable, and computer vision systems must be able to handle different lighting conditions, weather changes, and physical obstructions. For example, a robot navigating outdoors might face poor visibility due to fog or intense sunlight. Algorithms must be robust enough to handle these variables and still make accurate decisions.
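One basic preprocessing step that reduces sensitivity to dim or washed-out lighting is contrast stretching, which rescales pixel intensities to span the full range. The sketch below is a minimal version for a grayscale image stored as a list of rows; the function name is an assumption. It addresses only global brightness and contrast, and does not help with fog, glare, or occlusion.

```python
def stretch_contrast(image):
    """Linearly rescale a grayscale image so its darkest pixel maps to 0
    and its brightest to 255. A uniform image is returned unchanged."""
    flat = [pixel for row in image for pixel in row]
    if not flat:
        raise ValueError("image must contain at least one pixel")
    low, high = min(flat), max(flat)
    if high == low:
        return [row[:] for row in image]
    scale = 255.0 / (high - low)
    return [[round((pixel - low) * scale) for pixel in row] for row in image]


dim_image = [[50, 60], [70, 80]]     # low-contrast capture
print(stretch_contrast(dim_image))   # [[0, 85], [170, 255]]
```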

3. Object Recognition and Generalization

While object recognition algorithms have made significant strides, generalizing across diverse environments remains difficult. A robot trained to recognize objects in one setting might struggle to identify them in another with different lighting, backgrounds, or clutter. To address this, researchers are developing more general models that can adapt to new environments with minimal retraining.

4. Ethical and Privacy Concerns

As robots equipped with computer vision systems become more integrated into daily life, issues related to privacy and ethics arise. Surveillance, facial recognition, and data collection could potentially infringe on personal privacy. Developers and policymakers must ensure that computer vision technologies are used responsibly, with appropriate safeguards in place to protect privacy and security.

The Future of Computer Vision in Robotics

Ongoing advances in deep learning, computing hardware, and sensor technology continue to extend what computer vision can do for robotics. Some key areas of future development include:

- Autonomous Navigation: Robots will continue to improve their ability to navigate complex environments autonomously, even in challenging and unpredictable settings.

- Human-Robot Interaction: Advances in emotion and gesture recognition will make interactions between robots and humans more natural and intuitive.

- Multi-Sensor Fusion: Combining visual data with other sensors such as LiDAR, radar, and tactile sensors will create more robust robotic systems capable of performing a wider range of tasks.
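The idea behind sensor fusion can be shown with a single measured quantity. The sketch below combines independent distance estimates by inverse-variance weighting, so that more precise sensors count for more. It assumes the errors are independent and that each variance is known; the readings are hypothetical, and real systems typically use richer filters such as the Kalman filter.

```python
def fuse_estimates(estimates):
    """Fuse independent (value, variance) estimates of the same quantity.

    Returns (fused_value, fused_variance) using inverse-variance weights.
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    if any(variance <= 0 for _, variance in estimates):
        raise ValueError("variances must be positive")
    weights = [1.0 / variance for _, variance in estimates]
    total_weight = sum(weights)
    fused = sum(w * value for w, (value, _) in zip(weights, estimates)) / total_weight
    return fused, 1.0 / total_weight


camera = (10.4, 0.25)   # hypothetical: distance in m, variance in m^2
lidar = (10.0, 0.01)    # hypothetical: far more precise than the camera
value, variance = fuse_estimates([camera, lidar])
print(f"fused distance {value:.3f} m, variance {variance:.4f}")
```

The fused estimate lands close to the LiDAR reading, and its variance is lower than that of either sensor alone, which is the practical payoff of combining them.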

As computer vision technology continues to evolve, the boundaries of what robots can accomplish will expand, bringing us closer to fully autonomous machines that can seamlessly integrate into our everyday lives.

Conclusion

Computer vision has emerged as one of the most transformative technologies in the field of robotics. By enabling robots to see and understand their surroundings, computer vision allows them to interact with the environment intelligently and autonomously. From autonomous vehicles to healthcare robots and industrial automation, the impact of computer vision is undeniable. While there are still challenges to overcome, the continued advancements in this field hold the potential to revolutionize robotics and reshape industries worldwide.