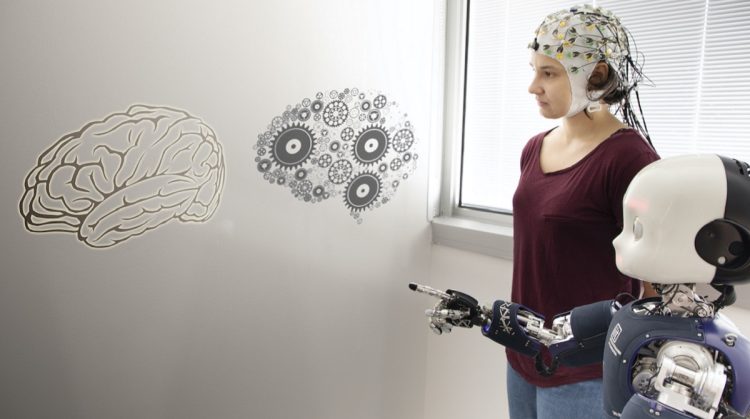

In recent years, robotics has made notable advances in how robots interact with humans. Traditionally, robots have been designed as task-oriented machines with limited or no emotional capabilities. Growing interest in making robots more empathetic and socially intelligent has driven the adoption of affective computing: systems that allow robots to recognize, interpret, and respond to human emotions. With these technologies, robots can go beyond performing tasks to engage in emotionally aware interactions that improve the overall user experience.

Affective computing is a multidisciplinary field that combines computer science, psychology, cognitive science, and artificial intelligence to enable machines to detect and simulate human emotions. This article explores how affective computing technology is revolutionizing the world of robotics, allowing robots to recognize emotional cues and generate emotional responses that improve human-robot interaction (HRI). We will delve into the key technologies behind affective computing, its applications in robotics, and the challenges and ethical considerations associated with the integration of emotional intelligence in machines.

Introduction: The Evolution of Emotional Robots

Historically, robots have been designed to perform well-defined tasks with precision and efficiency. However, as robots become more integrated into daily human life, particularly in fields like healthcare, education, and customer service, there is an increasing demand for robots to be more than just functional tools. Human-robot interaction is a critical aspect of this evolution, as it influences how users perceive and trust robots.

Humans are inherently social creatures, and much of our daily communication involves emotional expression. When robots are able to recognize and respond to emotional signals, the interaction becomes more natural and effective. Affective computing aims to equip robots with the ability to detect emotions through various cues—such as facial expressions, voice tone, and body language—and to respond in ways that are contextually appropriate, whether by offering comfort, encouragement, or simply adapting to the user’s mood.

This shift from task-focused to emotionally aware robots opens up numerous possibilities. In healthcare, emotionally intelligent robots could provide better care and emotional support to patients. In customer service, they could enhance user satisfaction by offering tailored interactions. As robots evolve to be more socially intelligent, they may even become companions for the elderly or individuals with special needs, offering not only functional support but also emotional companionship.

What is Affective Computing?

Affective computing, a term coined by Rosalind Picard in the mid-1990s, refers to the design of systems and devices that can recognize, interpret, simulate, and respond to human emotions. The field draws from several disciplines, including psychology, cognitive science, and artificial intelligence (AI), and focuses on understanding how emotional states influence human behavior and communication.

Key Components of Affective Computing:

- Emotion Recognition: The first step in affective computing is the ability to identify emotional signals. This can be achieved through various sensors and algorithms that analyze facial expressions, speech patterns, physiological signals, and even text input to detect emotional states like happiness, sadness, anger, fear, and surprise.

- Emotion Simulation: After recognizing emotions, robots can simulate emotional expressions using mechanisms like facial displays, voice modulation, and body language. This simulation makes the robot appear more empathetic and human-like.

- Emotion Response: Finally, robots can respond to emotional cues by tailoring their actions to the user’s emotional state. For example, if a user is upset, the robot may provide comforting words or calming actions to alleviate stress. On the other hand, if a user is happy, the robot might celebrate or encourage the user, fostering a positive interaction.

These capabilities are made possible by sophisticated machine learning algorithms, sensor technologies, and real-time processing systems that enable robots to adapt their behavior in dynamic environments based on emotional feedback.
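The recognition-then-response flow described above can be sketched as a small lookup policy. This is a minimal illustration, not a production design: the `EmotionEstimate` type, the `RESPONSES` table, and the 0.6 confidence threshold are all assumptions introduced here for the example.

```python
from dataclasses import dataclass


@dataclass
class EmotionEstimate:
    label: str         # output of a (hypothetical) recognition stage, e.g. "sadness"
    confidence: float  # recognizer confidence in the range 0.0 to 1.0


# Hypothetical response policy: emotion label -> (spoken line, expressive style).
RESPONSES = {
    "sadness": ("That sounds hard. I'm here with you.", "soft"),
    "anger": ("I understand this is frustrating. Let's sort it out.", "calm"),
    "happiness": ("That's great news!", "excited"),
}
NEUTRAL = ("How can I help?", "neutral")


def choose_response(estimate, min_confidence=0.6):
    """Pick a reply and style, falling back to neutral when recognition is unsure."""
    if estimate.confidence < min_confidence:
        return NEUTRAL
    return RESPONSES.get(estimate.label, NEUTRAL)


reply, style = choose_response(EmotionEstimate("sadness", 0.9))
```

Falling back to a neutral reply when confidence is low is one way to avoid responding emotionally to a misread cue, which can feel worse to a user than no emotional response at all.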

The Technologies Behind Emotion Recognition in Robots

Affective computing relies heavily on various technologies that allow robots to detect and interpret emotional cues from humans. Below are some of the key technologies used in emotion recognition:

1. Facial Expression Analysis

Facial expressions are one of the most powerful indicators of human emotions. The muscles in the face form expressions that can convey a wide range of emotions. Through computer vision and deep learning algorithms, robots can analyze these expressions to identify the emotional state of a person.

- Computer Vision and Deep Learning: Using convolutional neural networks (CNNs), robots can process images of human faces to detect facial landmarks and analyze subtle changes in facial features, such as the mouth, eyes, and eyebrows. These changes are then mapped to specific emotions.

- Emotion Detection Databases: Facial emotion recognition systems are often trained using large datasets of labeled facial expressions, such as the FER-2013 dataset, which contains roughly 35,000 grayscale face images at 48×48 pixels, each labeled with one of seven categories (anger, disgust, fear, happiness, sadness, surprise, and neutral).
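To make the last step of that mapping concrete, the sketch below turns a classifier's raw output scores into an emotion label using a softmax. It assumes a trained CNN that emits seven scores in the label order commonly used with FER-2013; the scores here are made up, and the network itself is out of scope.

```python
import math

# Label order commonly used with FER-2013 (indices 0 through 6).
FER2013_LABELS = ["angry", "disgust", "fear", "happy", "sad", "surprise", "neutral"]


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_emotion(logits):
    """Return the most likely label and its probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return FER2013_LABELS[best], probs[best]


# Made-up scores standing in for the output of a trained CNN on one face image.
label, prob = top_emotion([0.1, -1.2, 0.3, 2.5, 0.0, 0.4, 0.9])
```

The returned probability can serve as a confidence value, so that downstream behavior can stay neutral when no single emotion clearly dominates.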

2. Speech and Voice Analysis

The tone, pitch, cadence, and volume of speech also carry significant emotional information. Audio analysis algorithms can extract these features to estimate the emotional state of a speaker.

- Speech Emotion Recognition (SER): Speech emotion recognition involves using machine learning algorithms to identify emotions from speech. Features such as the speed of speech, intonation, and pauses are analyzed to detect emotions like anger, joy, sadness, or fear.

- Acoustic Signal Processing: This technology processes the acoustic features of speech, such as pitch variation, loudness, and speech rate, to identify emotional cues.
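As a rough illustration of acoustic feature extraction, the sketch below computes two simple features from raw audio samples: RMS energy (a proxy for loudness) and zero-crossing rate (a crude proxy for pitch on clean tones). The synthetic sine waves stand in for recorded speech and are an assumption of this example; real SER systems typically use richer features such as pitch contours and spectral coefficients.

```python
import math


def rms_energy(samples):
    """Root-mean-square amplitude, a simple loudness measure."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def zero_crossing_rate(samples):
    """Fraction of adjacent sample pairs where the signal changes sign."""
    crossings = sum(1 for a, b in zip(samples, samples[1:]) if (a < 0) != (b < 0))
    return crossings / (len(samples) - 1)


def sine(freq_hz, amplitude, sample_rate=8000, duration_s=0.1):
    """Generate a synthetic tone as a stand-in for a voiced speech frame."""
    n = int(sample_rate * duration_s)
    return [amplitude * math.sin(2 * math.pi * freq_hz * i / sample_rate) for i in range(n)]


calm = sine(120, 0.2)      # quiet, low-pitched frame
agitated = sine(300, 0.8)  # loud, higher-pitched frame

features = {
    "calm": (rms_energy(calm), zero_crossing_rate(calm)),
    "agitated": (rms_energy(agitated), zero_crossing_rate(agitated)),
}
```

Feature vectors like these would then be passed to a trained classifier; the features alone do not identify an emotion.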

3. Physiological Signals

In addition to facial expressions and voice, physiological signals such as heart rate, skin conductance, and body temperature provide valuable emotional data. These signals are typically monitored using sensors placed on the user or embedded within the robot.

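- Heart Rate Variability (HRV): HRV measures the beat-to-beat fluctuation in the time between heartbeats and can help distinguish stress from relaxation. Reduced variability, often accompanied by an elevated heart rate, is commonly associated with stress, so a robot that detects this pattern could infer that a person may be anxious or stressed.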

- Galvanic Skin Response (GSR): GSR sensors measure skin conductivity, which changes with sweat production and can be used to detect emotional arousal, particularly anxiety or excitement.
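One common time-domain HRV measure is RMSSD, the root mean square of successive differences between heartbeat intervals. The sketch below computes it alongside mean heart rate; the interval values are invented for illustration, and no clinical threshold is implied.

```python
import math


def rmssd(rr_intervals_ms):
    """Root mean square of successive differences between RR intervals (ms)."""
    diffs = [b - a for a, b in zip(rr_intervals_ms, rr_intervals_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


def mean_heart_rate_bpm(rr_intervals_ms):
    """Average heart rate in beats per minute from RR intervals (ms)."""
    return 60000.0 / (sum(rr_intervals_ms) / len(rr_intervals_ms))


# Invented RR intervals (time between beats, in milliseconds).
relaxed = [850, 910, 820, 900, 840, 915]   # slower beats, more variation
stressed = [610, 615, 605, 612, 608, 611]  # faster beats, little variation

summary = {
    "relaxed": (rmssd(relaxed), mean_heart_rate_bpm(relaxed)),
    "stressed": (rmssd(stressed), mean_heart_rate_bpm(stressed)),
}
```

In this toy data the stressed series has both a higher heart rate and a lower RMSSD. In practice such readings are interpreted relative to an individual's baseline rather than against a fixed cutoff.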

4. Text-Based Emotion Detection

Natural language processing (NLP) can be used to detect emotions in text-based communication. Sentiment analysis algorithms analyze the words, phrases, and tone of written language to determine the emotional state of the writer.

- Text Mining and Sentiment Analysis: Robots can analyze written input from users—such as chat messages or social media posts—to detect emotional tones. For example, if a user writes “I feel terrible,” the robot can recognize the negative sentiment and respond empathetically.
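A very simple form of sentiment analysis scores text against a word list. The sketch below is a toy version: the `LEXICON` and `NEGATIONS` sets are tiny, hand-written assumptions for this example, and modern systems generally rely on trained language models instead.

```python
import re

# Tiny illustrative lexicon (an assumption, not a published resource).
LEXICON = {
    "terrible": -2, "awful": -2, "angry": -2, "sad": -1,
    "good": 1, "great": 2, "happy": 2, "love": 2,
}
NEGATIONS = {"not", "never", "no"}


def sentiment_score(text):
    """Sum word scores; a negation word flips the next scored word."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0
    negate = False
    for token in tokens:
        if token in NEGATIONS:
            negate = True
            continue
        value = LEXICON.get(token, 0)
        if value:
            score += -value if negate else value
            negate = False
    return score


def classify(text):
    """Map the numeric score to a coarse sentiment label."""
    score = sentiment_score(text)
    if score < 0:
        return "negative"
    if score > 0:
        return "positive"
    return "neutral"


result = classify("I feel terrible")
```

For the input "I feel terrible", the only scored word is "terrible", so the message is classified as negative and the robot can choose an empathetic reply.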

Generating Emotional Responses: How Robots Simulate Emotions

Once a robot recognizes the emotions of a human user, it must simulate appropriate emotional responses. This can be achieved through various methods that involve both verbal and non-verbal cues.

1. Facial Expression Simulation

Robots can display a wide range of emotions through facial expressions. These expressions are often generated using robotic faces equipped with actuators or digital displays.

- Robotic Faces: Some robots, such as social robots, use facial actuators to manipulate the appearance of their faces, mimicking human expressions like smiling, frowning, or raising eyebrows. These facial expressions help the robot appear more emotionally responsive.

- Digital Displays: In other cases, robots with digital screens can simulate emotional expressions by changing the facial images shown on the screen, offering a visually dynamic response to human emotions.

2. Voice Modulation

Voice modulation allows robots to simulate emotions through changes in pitch, volume, and speech rate. A robot can speak in a soothing tone when comforting someone or use a more excited tone when congratulating them.

- Prosody Adjustment: This involves changing the rhythm, stress, and intonation of speech to match the emotion the robot is trying to convey. A high-pitched voice with a fast pace might indicate excitement, while a slower, softer voice can signal empathy or calmness.
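Many text-to-speech engines accept SSML, whose `prosody` element controls rate, pitch, and volume. The sketch below wraps a reply in a style-dependent `prosody` tag. The style names and the `PROSODY` table are assumptions made for this example, and the output is a fragment: a complete SSML document also needs version and namespace attributes on `speak`, and engine support for individual values varies.

```python
from xml.sax.saxutils import escape

# Hypothetical mapping from an expressive style to SSML prosody settings.
PROSODY = {
    "excited": {"rate": "fast", "pitch": "high", "volume": "loud"},
    "comforting": {"rate": "slow", "pitch": "low", "volume": "soft"},
}
DEFAULT = {"rate": "medium", "pitch": "medium", "volume": "medium"}


def to_ssml(text, style):
    """Wrap text in an SSML prosody element chosen by style."""
    settings = PROSODY.get(style, DEFAULT)
    template = (
        '<speak><prosody rate="{rate}" pitch="{pitch}" volume="{volume}">'
        "{text}</prosody></speak>"
    )
    # Escape &, <, and > so user-facing text cannot break the markup.
    return template.format(text=escape(text), **settings)


ssml = to_ssml("Well done!", "excited")
```

Keeping the style-to-prosody mapping in a table makes it straightforward to tune how each emotion sounds without changing the synthesis code.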

3. Body Language and Gestures

Non-verbal communication is crucial in human interactions, and robots can simulate emotions by altering their body language and movements.

- Posture and Movement: Robots can use their body posture to express emotions. For example, they may lean forward to show interest or empathy, or they may display a relaxed posture to convey calmness.

- Gestures: Robots can also use hand gestures, nodding, or tilting their heads to further simulate human-like emotional responses. These gestures are typically designed to make the interaction feel more authentic and emotionally engaging.

Applications of Emotionally Intelligent Robots

The ability of robots to recognize and respond to emotions opens up a variety of applications across multiple domains. Some of the most notable applications include:

1. Healthcare

Emotionally intelligent robots can play a significant role in healthcare, particularly in patient care, therapy, and elderly support.

- Companionship for the Elderly: Robots can provide emotional support to elderly individuals, particularly those living with dementia, including Alzheimer’s disease. These robots can engage in conversations, provide reminders, and offer companionship, reducing feelings of loneliness.

- Therapeutic Robots: In therapeutic settings, robots can be used to assist patients with emotional regulation, help with mental health treatment, or provide stress relief through relaxation techniques.

2. Customer Service

In customer service, robots with emotional intelligence can improve user experiences by providing more personalized, empathetic interactions.

- Emotional Engagement: Robots in retail or hospitality environments can recognize customer frustration and offer calming responses or resolve issues more quickly, enhancing customer satisfaction.

- Tailored Services: Robots can also adjust their responses based on the emotional tone of the customer, making them feel heard and understood.

3. Education

Robots in education can respond to students’ emotional states, adjusting their teaching methods or providing emotional support when needed.

- Personalized Learning: Robots can detect when students are frustrated or bored and adapt their teaching style to match the student’s emotional state, improving engagement and learning outcomes.

- Social Skills Development: Social robots can help children, particularly those with autism spectrum disorder (ASD), practice emotional recognition and social interactions in a safe and controlled environment.

Challenges and Ethical Considerations

Despite the tremendous potential of emotionally intelligent robots, there are several challenges and ethical concerns that need to be addressed:

1. Authenticity of Emotional Responses

One of the key concerns is whether robots can truly understand emotions or if they are simply mimicking emotional responses. While robots may appear to express emotions convincingly, they lack the genuine experience of emotion, which could lead to ethical issues regarding transparency and user trust.

2. Privacy and Data Security

Emotion recognition systems rely on collecting sensitive data, such as facial expressions, speech patterns, and physiological signals. Ensuring that this data is securely stored and not misused is critical to maintaining user trust and privacy.

3. Dependence on Robots for Emotional Support

As robots become more emotionally intelligent, there is a risk that individuals might become overly dependent on robots for companionship and emotional support. It is important to strike a balance between robotic interaction and human relationships to avoid social isolation.

Conclusion

The integration of affective computing technology in robots represents a significant breakthrough in human-robot interaction. By enabling robots to not only recognize emotions but also generate appropriate emotional responses, we open the door to a wide range of applications that can enhance human life across multiple domains. While the development of emotionally intelligent robots brings numerous benefits, it also requires careful consideration of ethical concerns, including authenticity, privacy, and dependency. As research in affective computing continues to advance, the potential for robots to become valuable, empathetic companions in various contexts grows, promising a future where robots and humans can interact on a much deeper emotional level.