In the world of robotics, perception is fundamental for performing tasks autonomously and intelligently. To enable robots to interact with and navigate through the real world, sensors are needed to provide critical information about the surrounding environment. Among these sensors, visual sensors such as cameras and LiDAR (Light Detection and Ranging) play a pivotal role. These sensors allow robots to gather both image and depth information, helping them make informed decisions about object recognition, obstacle avoidance, and navigation.

In this article, we will explore the importance of visual sensors, particularly cameras and LiDAR, in the development of autonomous robotic systems. We will discuss how these sensors capture environmental data, how they complement each other, their applications, challenges, and the future of visual sensing in robotics.

Introduction: The Role of Visual Sensors in Robotics

Robots are increasingly being required to operate autonomously in dynamic, unpredictable, and often complex environments. This includes tasks such as:

- Autonomous navigation in unknown or changing environments (e.g., robots navigating a factory floor, self-driving cars on the road, or drones flying through a cityscape).

- Object recognition and classification for picking, sorting, and manipulation tasks in industrial automation.

- Human-robot interaction in service robots, assistive robots, and healthcare applications.

To achieve these capabilities, robots must “see” and understand their surroundings. While various types of sensors (such as ultrasonic sensors, radar, and force sensors) provide valuable data about the environment, visual sensors—namely cameras and LiDAR—are particularly effective in capturing rich, high-fidelity data that allows for detailed understanding and decision-making.

Why Visual Sensors Matter?

Visual sensors offer a wealth of information that goes beyond simple proximity sensing. While other sensors (e.g., radar or ultrasonic sensors) may detect objects, cameras and LiDAR provide robots with both spatial and contextual understanding of their surroundings, which is essential for performing complex tasks such as:

- Obstacle avoidance: Identifying and reacting to obstacles in real-time.

- 3D mapping: Constructing accurate models of environments, which is crucial for path planning and navigation.

- Object recognition: Classifying objects based on appearance, size, and shape, which is vital for tasks like assembly, sorting, or object manipulation.

Thus, combining vision sensors with artificial intelligence enables robots to perform sophisticated tasks that would be impossible using other forms of sensing alone.

1. Cameras: The Foundation of Robotic Vision

Cameras are arguably the most common form of visual sensor used in robotics. They are similar to human eyes in that they capture images or video footage of the environment, which can then be processed by algorithms for object detection, recognition, and scene understanding.

Types of Cameras Used in Robotics:

- Monocular Cameras: A single camera that captures a 2D image of the environment. Monocular cameras are affordable and widely used in various applications. They are often used in robots that need to perform basic tasks like visual feedback for control or simple object detection.

- Stereo Cameras: These systems use two or more cameras placed at slightly different angles to mimic human depth perception. The difference in perspective between the cameras allows for the creation of a 3D map of the environment, which is invaluable for tasks requiring depth perception, such as navigation or obstacle avoidance.

- RGB-D Cameras: These cameras combine a standard color image (RGB) with depth information (D), allowing for both visual recognition and depth sensing. Popular examples include the Microsoft Kinect and Intel RealSense cameras, which are widely used in both academic research and industrial applications.

Applications of Cameras in Robotics:

- Object Detection and Classification: Cameras enable robots to detect and identify objects within their environment. By processing visual data using machine learning algorithms, robots can classify objects based on color, texture, and shape.

- Visual SLAM (Simultaneous Localization and Mapping): Cameras are crucial for SLAM, a technique that allows robots to build and update a map of an unknown environment while simultaneously tracking their location within that map. This is essential for autonomous navigation.

- Human-Robot Interaction: Cameras help robots recognize human gestures, facial expressions, and other social cues, allowing for more intuitive and effective interaction between humans and robots.

Limitations of Cameras:

- Lighting Dependence: Cameras are highly sensitive to lighting conditions. Low-light environments or bright, direct lighting can distort the quality of the captured image, affecting the accuracy of object recognition and scene understanding.

- Occlusions: Objects may be partially obscured or blocked by other objects, making it difficult for cameras to detect or identify them.

- Limited Depth Information: A single camera only provides 2D images, and obtaining accurate depth information can be challenging. While stereo or depth cameras can provide 3D data, it may not always be precise enough for tasks requiring high accuracy.

2. LiDAR: Adding Depth to Vision

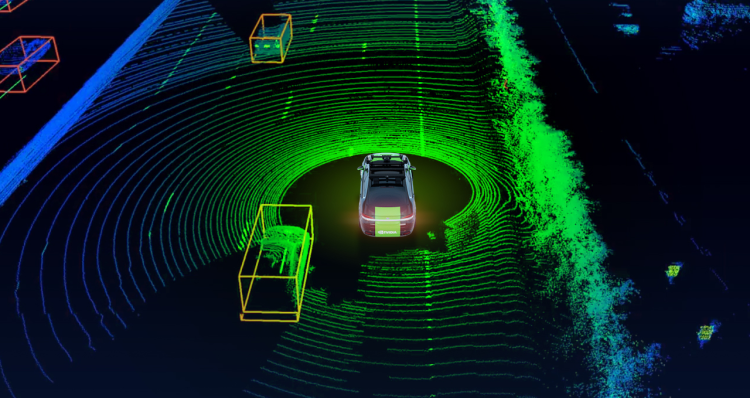

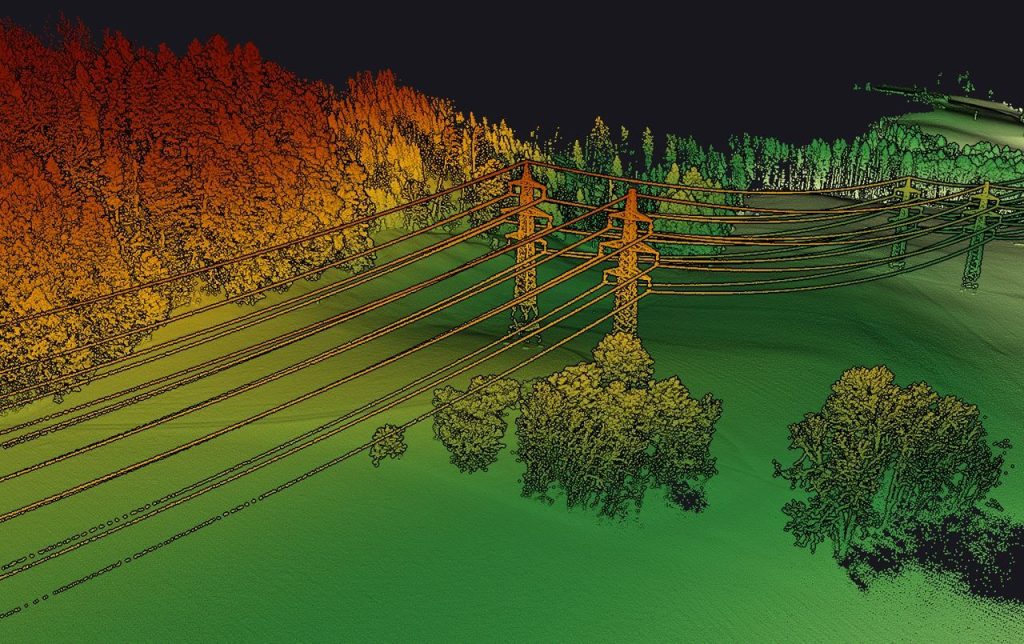

LiDAR is a powerful technology that uses laser beams to measure distances to objects and generate precise, 3D point cloud data of the environment. Unlike cameras, which capture images based on visible light, LiDAR operates by emitting laser pulses and analyzing the time it takes for the pulses to reflect back from objects. This enables LiDAR to provide highly accurate depth information, even in challenging environments.

Working Principle of LiDAR:

LiDAR systems emit laser beams in multiple directions, and by measuring the time it takes for each pulse to return after hitting an object, they can calculate the precise distance to that object. These measurements are then used to create a 3D map of the environment, which can be visualized as a point cloud.

Applications of LiDAR in Robotics:

- 3D Mapping and Modeling: LiDAR is invaluable in creating highly accurate 3D maps of complex environments. In autonomous vehicles, for example, LiDAR generates detailed maps of the road, including precise information about the location of lanes, obstacles, and traffic signs.

- Obstacle Detection and Avoidance: LiDAR’s high precision and ability to operate in various lighting conditions make it excellent for detecting obstacles in the environment. This is crucial for robots that need to navigate through cluttered or dynamic spaces.

- Autonomous Navigation: LiDAR is widely used in autonomous vehicles and drones for real-time mapping and navigation. By combining LiDAR data with visual sensors like cameras, robots can accurately navigate and make decisions about their path in real-time.

Advantages of LiDAR:

- High Precision: LiDAR can create highly detailed 3D maps with millimeter-level accuracy, which is critical for tasks such as collision avoidance and navigation.

- Works in Low Light: Unlike cameras, LiDAR works effectively in low-light or no-light conditions, making it ideal for environments where visibility may be compromised.

- Long Range: LiDAR systems can detect objects at long ranges (typically up to 100 meters or more), which is useful for applications such as autonomous vehicles and drones operating in open spaces.

Challenges of LiDAR:

- Cost: LiDAR systems can be expensive, particularly high-resolution models. This has limited their widespread adoption in some robotics applications.

- Limited Object Recognition: LiDAR can accurately map objects and measure distances, but it may struggle with recognizing the specific characteristics or identities of objects, which cameras can do more effectively.

- Weather Sensitivity: LiDAR can be affected by adverse weather conditions like rain, fog, or snow, which may cause the laser pulses to scatter, resulting in inaccurate data.

3. Combining Cameras and LiDAR: The Power of Sensor Fusion

While both cameras and LiDAR have distinct advantages, their integration creates a more comprehensive perception system for robots. By combining the high precision of LiDAR’s 3D point clouds with the rich texture and object recognition capabilities of cameras, robots can achieve enhanced spatial awareness and environmental understanding.

Benefits of Sensor Fusion:

- Complementary Data: Cameras excel at providing detailed visual data, such as texture, color, and shape, which LiDAR cannot capture. Conversely, LiDAR provides highly accurate depth data and performs well in low-light conditions, where cameras may fail.

- Improved Accuracy and Robustness: Sensor fusion allows robots to cross-check data from different sensors, improving the overall accuracy of object detection, navigation, and obstacle avoidance. For example, LiDAR might detect the presence of an object, while the camera identifies its shape and classification.

- Redundancy: Sensor fusion adds redundancy, ensuring that if one sensor fails or is compromised (e.g., in poor lighting conditions), the other sensor can continue to provide valuable data.

Applications of Sensor Fusion:

- Autonomous Vehicles: Autonomous vehicles often rely on a combination of LiDAR, cameras, and radar to navigate safely through various environments. The fusion of data from these sensors enables accurate mapping, object detection, and decision-making.

- Robotic Navigation in Unstructured Environments: Robots operating in complex or unstructured environments, such as warehouses or rescue missions, benefit from the combined strength of cameras and LiDAR. This enables them to detect obstacles, map their surroundings, and navigate safely.

- Industrial Automation: In industrial settings, sensor fusion enables robots to manipulate objects, assemble products, and inspect materials with high precision. The combination of cameras for object recognition and LiDAR for spatial awareness allows robots to perform complex tasks efficiently and safely.

4. Future Directions in Visual Sensing for Robotics

As technology continues to evolve, we can expect significant advancements in the field of visual sensing for robotics:

- Miniaturization: The development of smaller, more affordable LiDAR sensors and cameras will make it easier to integrate advanced visual sensing into a wide range of robotic systems.

- AI-Driven Perception: Artificial intelligence and machine learning algorithms will continue to enhance the capabilities of visual sensors, enabling robots to recognize objects, understand scenes, and make decisions based on complex visual input.

- Multi-Modal Perception: The fusion of visual sensors with other types of sensors (e.g., ultrasonic, infrared, radar) will lead to more robust and adaptable robots capable of operating in a wider range of environments.

Conclusion: Visionary Sensors for the Next Generation of Robotics

The integration of visual sensors such as cameras and LiDAR is playing a key role in the development of advanced robotic systems. These sensors provide critical data about the environment, enabling robots to perform a wide range of tasks from object recognition to autonomous navigation.

As sensor technologies continue to advance, and as sensor fusion becomes more sophisticated, robots will become increasingly autonomous, efficient, and adaptable. This will open up new possibilities in various industries, from autonomous vehicles to industrial automation, healthcare, and beyond. Ultimately, the future of robotics will be built on the synergy between advanced sensing technologies, powerful computational algorithms, and human-like perception.