Introduction

Advances in robotics have produced machines that perform a broad range of tasks in industries from manufacturing to healthcare. To function effectively in dynamic and complex environments, robots need technologies that let them perceive, interpret, and interact with their surroundings. One of the key enablers of these capabilities is 3D modeling, which gives a robot a detailed, three-dimensional representation of its workspace.

With 3D models, robots can not only map and understand their environment but also act more accurately, which matters most in tasks that demand high precision. This article explores how 3D modeling improves robot performance, particularly in complex tasks that require fine motor skills, decision-making, and adaptive responses to changing conditions.

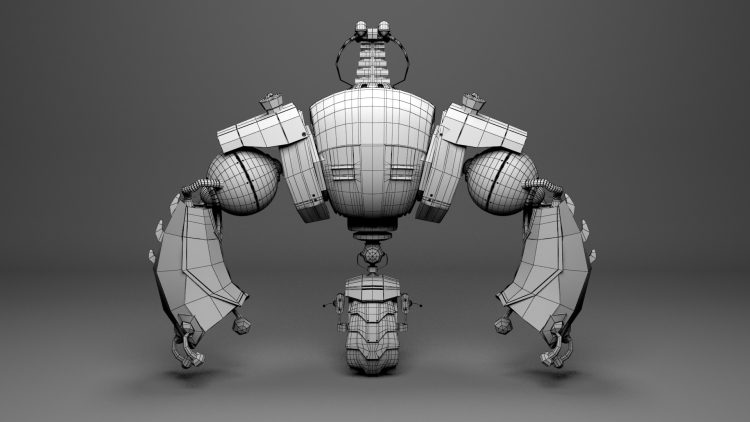

The Role of 3D Modeling in Robotics

At the heart of modern robotic systems is the ability to understand and interact with the world in three dimensions. Conventional 2D cameras and simple proximity sensors provide limited information about the shape and position of objects, which becomes a problem in tasks requiring precise manipulation or navigation in cluttered environments. To overcome these limitations, robots build a 3D model: a digital representation of their workspace that encodes the position and shape of surfaces and objects. Reasoning over this model enables more accurate navigation, better task execution, and improved safety.

1. Understanding the Environment

The first step in any robotic operation is perception: understanding what is happening around the robot. 3D models give robots a form of spatial awareness comparable to a human's sense of where things are. A single 2D image does not directly measure how far away each object is, whereas a 3D model stores explicit geometry, so the robot can analyze its surroundings in much greater detail. This is essential for tasks such as object detection, obstacle avoidance, and path planning.

The sensing behind this process typically combines LIDAR, stereo vision, and structured-light scanning. These sensors produce point clouds or depth maps, which are then processed into a 3D model of the robot's environment. With this model, the robot can identify objects, navigate complex terrain, and even simulate potential interactions before making a physical move.
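To make the step from depth map to point cloud concrete, here is a minimal sketch that back-projects each pixel of a depth map into a 3D point using the standard pinhole camera model. It assumes the camera intrinsics (focal lengths `fx`, `fy` and principal point `cx`, `cy`, in pixels) are already known from calibration and that a depth of zero marks a missing reading; the function name `depth_to_point_cloud` is hypothetical, and lens distortion is ignored.

```python
def depth_to_point_cloud(depth, fx, fy, cx, cy):
    """Back-project a depth map into a list of (x, y, z) points in the camera frame.

    depth is a list of rows of depth values in metres (0 means no reading);
    fx, fy are focal lengths and (cx, cy) the principal point, all in pixels.
    Assumes an undistorted pinhole camera.
    """
    points = []
    for v, row in enumerate(depth):      # v: pixel row index
        for u, z in enumerate(row):      # u: pixel column index
            if z <= 0:                   # skip pixels with no valid depth
                continue
            x = (u - cx) * z / fx        # invert the projection u = fx * x / z + cx
            y = (v - cy) * z / fy        # invert the projection v = fy * y / z + cy
            points.append((x, y, z))
    return points


# Usage: a tiny 2x2 depth map with one missing reading.
cloud = depth_to_point_cloud([[0.0, 2.0], [2.0, 2.0]], fx=1.0, fy=1.0, cx=0.0, cy=0.0)
```

Real pipelines do the same computation in vectorized form over millions of pixels per second, but the geometry is identical.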

2. Improving Precision in Manipulation Tasks

3D modeling has a particularly strong impact on robot manipulation. Whenever a robot must handle objects, whether in manufacturing, healthcare, or domestic settings, accuracy is crucial. Without accurate knowledge of an object's location, size, and orientation in three-dimensional space, a robot would struggle to pick, assemble, or adjust it.

For instance, a robot with 3D modeling capabilities can model an assembly line and determine the precise location of each component. Using that model, it can plan an efficient, collision-free path for its arm or gripper to reach and grasp each part, reducing errors and increasing throughput.
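As a rough illustration of how a 3D model yields an object's location, size, and orientation, the sketch below takes the points belonging to one already-segmented object and computes its centroid (a candidate grasp location), the yaw of its dominant horizontal axis (a candidate gripper orientation), and its axis-aligned extents. This is a deliberately crude, hypothetical helper (`estimate_object_pose`); practical grasp planners also account for gripper geometry, full 3D orientation, and contact quality.

```python
import math


def estimate_object_pose(points):
    """Rough pose of a segmented object from its 3D points.

    Returns the centroid (x, y, z), the yaw in radians of the object's dominant
    axis in the horizontal x-y plane, and the axis-aligned size (dx, dy, dz).
    """
    n = len(points)
    mx = sum(p[0] for p in points) / n
    my = sum(p[1] for p in points) / n
    mz = sum(p[2] for p in points) / n
    # 2x2 covariance of the horizontal coordinates
    sxx = sum((p[0] - mx) ** 2 for p in points) / n
    syy = sum((p[1] - my) ** 2 for p in points) / n
    sxy = sum((p[0] - mx) * (p[1] - my) for p in points) / n
    # Closed-form angle of the principal eigenvector of [[sxx, sxy], [sxy, syy]]
    yaw = 0.5 * math.atan2(2 * sxy, sxx - syy)
    size = tuple(max(p[i] for p in points) - min(p[i] for p in points) for i in range(3))
    return (mx, my, mz), yaw, size
```

For an elongated part lying diagonally on a conveyor, the yaw is about 45 degrees, so the gripper would be rotated to match before closing.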

3. Navigation and Path Planning

Integrating 3D models into navigation improves a robot's ability to move autonomously through an environment while avoiding obstacles and optimizing its route. With a 3D model of its surroundings, a robot can plan its movements based on detailed knowledge of the space. This is particularly valuable in environments with intricate layouts, such as warehouses, construction sites, or hospitals.

Path planning algorithms use 3D models to find the most efficient route for the robot, taking into account not only obstacles but also variables such as floor height, surface type, and potential hazards like slippery floors (the latter two only when the model is annotated with that information). In highly dynamic environments, where objects or people may move unexpectedly, a continuously updated 3D model lets the robot adapt its path in real time, adjusting its movements to avoid collisions and reach its target efficiently.
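One common way to turn such a model into a route is to project it onto a 2D grid of traversal costs and search that grid. The sketch below uses A* search, a standard shortest-path algorithm, chosen here for illustration rather than as the method any particular robot uses. It assumes each cell's cost (at least 1) has already been derived from the 3D model, for example higher for ramps or rough surfaces, and that `None` marks an obstacle; the function name `plan_path` and the cost values are hypothetical.

```python
import heapq


def plan_path(cost, start, goal):
    """A* over a 2D grid of traversal costs derived from a 3D map.

    cost[r][c] is the cost of entering cell (r, c) (>= 1), or None for an
    obstacle. Uses 4-connected moves and the Manhattan distance, which never
    overestimates the remaining cost because every step costs at least 1.
    Returns the list of cells from start to goal, or None if unreachable.
    """
    rows, cols = len(cost), len(cost[0])

    def h(cell):
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    frontier = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    came_from = {start: None}
    best = {start: 0}
    while frontier:
        _, g, cell = heapq.heappop(frontier)
        if cell == goal:
            path = []
            while cell is not None:  # walk back to the start
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > best[cell]:
            continue  # stale heap entry; a cheaper route was already found
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and cost[nr][nc] is not None:
                ng = g + cost[nr][nc]
                if ng < best.get((nr, nc), float("inf")):
                    best[(nr, nc)] = ng
                    came_from[(nr, nc)] = cell
                    heapq.heappush(frontier, (ng + h((nr, nc)), ng, (nr, nc)))
    return None
```

Replanning whenever the model changes (a person steps into a corridor, say) is what gives the real-time adaptation described above.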

4. Collaborative Robotics and Workspace Sharing

Another application of 3D modeling is collaborative robotics. Collaborative robots, or cobots, are designed to work alongside humans, assisting them with tasks such as assembly, packaging, and even surgery. To collaborate effectively, a cobot must have a clear understanding of both its environment and the people it works with.

With real-time 3D models, cobots can detect the presence and movement of humans, helping them avoid collisions with people or other machines. These models also let the robot adjust its behavior based on how close a human worker is. For example, if a worker enters the robot's workspace, the robot can slow down, change its path, or pause its operation to prevent accidents or injuries.
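The slow-down-or-pause behavior can be sketched as a speed scale factor computed from the smallest distance between any detected human point and any point on the robot. This is a simplified illustration in the spirit of speed and separation monitoring; the thresholds `stop_dist` and `slow_dist` and the function name `speed_scale` are hypothetical, and a real cobot would rely on safety-rated sensing and the limits set by standards such as ISO/TS 15066, not on code like this.

```python
import math


def speed_scale(human_points, robot_points, stop_dist=0.5, slow_dist=1.5):
    """Return a speed scale factor in [0, 1] based on human proximity.

    Distances are in metres (hypothetical thresholds). Below stop_dist the robot
    pauses (0.0), beyond slow_dist it runs at full speed (1.0), and in between
    the speed ramps linearly with the separation distance.
    """
    if not human_points:
        return 1.0  # nobody detected in the 3D model
    d = min(math.dist(h, r) for h in human_points for r in robot_points)
    if d <= stop_dist:
        return 0.0
    if d >= slow_dist:
        return 1.0
    return (d - stop_dist) / (slow_dist - stop_dist)
```

The commanded joint speeds would then be multiplied by this factor on every control cycle.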

3D spatial awareness also makes it easier for cobots to anticipate human actions. In collaborative settings, where tasks may require close synchronization between human and robot, 3D models help keep the robot's actions aligned with the worker's needs.
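A very simple form of anticipation is to extrapolate a tracked person's motion and check whether they are about to enter the robot's workspace. The sketch below assumes the person's position and velocity are already estimated from successive 3D models, models the workspace as an axis-aligned box, and uses constant-velocity prediction; the function name, horizon, and time step are hypothetical, and practical systems often use richer motion models.

```python
def will_enter_workspace(pos, vel, box_min, box_max, horizon=2.0, dt=0.1):
    """Predict whether a tracked person enters an axis-aligned workspace box.

    pos and vel are (x, y, z) in metres and metres per second. The person is
    extrapolated at constant velocity for `horizon` seconds in steps of dt.
    Returns (True, t) with the first predicted entry time t, or (False, None).
    """
    steps = int(round(horizon / dt))
    for k in range(steps + 1):
        t = k * dt
        p = [pos[i] + vel[i] * t for i in range(3)]  # predicted position at time t
        if all(box_min[i] <= p[i] <= box_max[i] for i in range(3)):
            return True, t
    return False, None
```

If an entry is predicted, the robot can begin slowing down before the person actually crosses the boundary.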

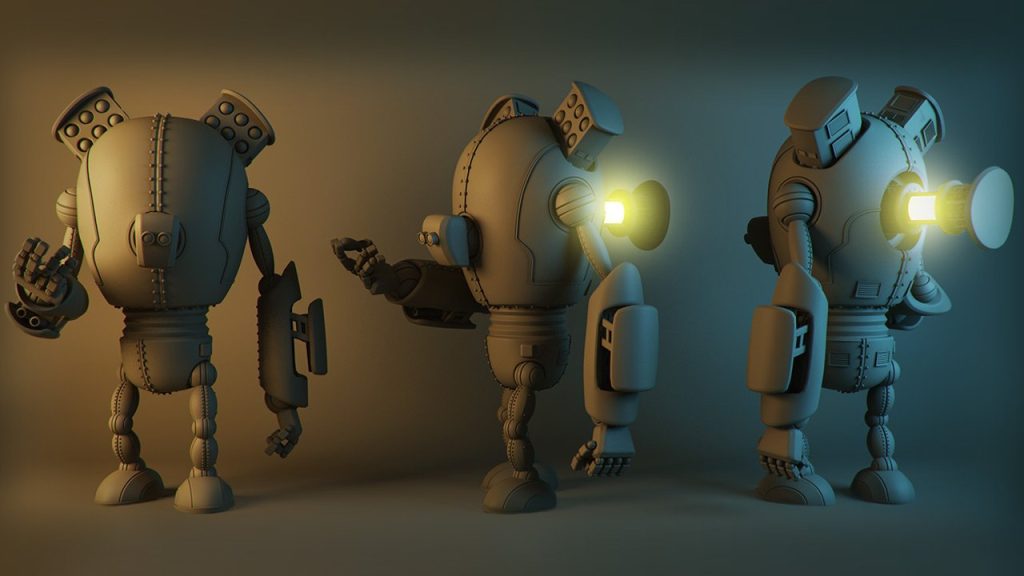

Technological Advancements in 3D Modeling for Robotics

1. Sensor Fusion

Accurate 3D models require data from several sensors. Sensor fusion combines the information from these sources into a more complete and accurate model than any single sensor could provide. A typical robot might use LIDAR, stereo cameras, and depth sensors to capture the environment, along with IMUs (Inertial Measurement Units) that track the robot's own motion so that successive scans can be aligned.
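At its simplest, fusing two sensors' measurements of the same quantity means weighting each by how much it can be trusted. The sketch below shows inverse-variance weighting, the one-dimensional case of the update used in Kalman-style fusion, assuming the measurements are independent with known Gaussian noise variances. The function name `fuse_measurements` and the example values are hypothetical.

```python
def fuse_measurements(estimates):
    """Fuse independent measurements of one quantity by inverse-variance weighting.

    estimates is a list of (value, variance) pairs, e.g. the same distance seen
    by LIDAR and by a stereo camera. Returns (fused_value, fused_variance).
    """
    weights = [1.0 / var for _, var in estimates]  # more precise => larger weight
    total = sum(weights)
    value = sum(w * val for w, (val, _) in zip(weights, estimates)) / total
    return value, 1.0 / total  # fused variance is smaller than either input


# Usage: a precise LIDAR reading (variance 0.01) and a noisier stereo one (0.04).
depth, var = fuse_measurements([(2.00, 0.01), (2.10, 0.04)])
```

Here the fused depth (about 2.02 m) lies closer to the more precise LIDAR reading, and its variance (about 0.008) is lower than either sensor's alone.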

LIDAR, for example, can provide precise depth information, while cameras can capture rich color and texture data. By combining these datasets, robots can produce 3D models that are not only spatially accurate but also rich in visual information, which is essential for tasks such as object recognition and interaction.
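One concrete way to combine LIDAR depth with camera color is to project each LIDAR point into the camera image and attach the color of the pixel it lands on. The sketch below assumes the points have already been transformed into the camera frame using a known LIDAR-to-camera calibration, uses the same pinhole intrinsics as a depth camera, and ignores lens distortion and occlusion; the function name `colorize_points` is hypothetical.

```python
def colorize_points(points_cam, image, fx, fy, cx, cy):
    """Attach an RGB color to each 3D point that projects inside the image.

    points_cam are (x, y, z) tuples already expressed in the camera frame
    (z pointing forward); image is a list of rows of (r, g, b) tuples.
    Points behind the camera or projecting outside the image are skipped.
    """
    h, w = len(image), len(image[0])
    colored = []
    for x, y, z in points_cam:
        if z <= 0:
            continue  # behind the camera
        u = int(round(fx * x / z + cx))  # pinhole projection to pixel column
        v = int(round(fy * y / z + cy))  # pinhole projection to pixel row
        if 0 <= u < w and 0 <= v < h:
            colored.append(((x, y, z), image[v][u]))
    return colored
```

The result is a colored point cloud: geometry from the LIDAR, appearance from the camera.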

2. Machine Learning and Artificial Intelligence

A robot's ability to build and use 3D models need not stay fixed. Machine learning (ML) algorithms can improve how well a robot adapts to new environments and tasks. For example, a robot that operates in a changing workspace, such as a factory floor, can use learned models to keep its 3D map up to date, drawing on past interactions to improve its spatial awareness over time.

Deep learning techniques, particularly in computer vision, allow robots to recognize objects in 3D space and adjust their actions in real time. By training on large datasets of labeled 3D data (such as point clouds and depth images) or on simulations, robots can learn to interpret their environment more accurately and, to a degree, generalize to scenarios they did not encounter during training.

3. Real-Time 3D Reconstruction

To interact with their environment effectively, robots need to update their 3D models in real time. This capability, known as real-time 3D reconstruction, involves continuously collecting sensor data and integrating it into the model as the robot moves. It is particularly important in dynamic environments, where the robot's picture of its surroundings must be refined constantly to account for changes such as moving obstacles or newly introduced objects.
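A widely used way to fold each new scan into an existing model is a probabilistic occupancy map updated in log-odds form: every observation of a voxel as occupied or free nudges its stored value up or down, so the map tracks changes without being rebuilt. The sketch below is a minimal sparse version; the class name `VoxelMap` and all parameter values (voxel size, hit/miss increments, clamping bounds) are illustrative assumptions, and a full system would also ray-cast along each sensor beam to mark the voxels it passes through as free.

```python
import math


class VoxelMap:
    """Sparse voxel occupancy map updated incrementally with log-odds.

    Parameter values are illustrative. Clamping keeps voxels from becoming so
    certain that they can no longer change when the scene changes.
    """

    def __init__(self, voxel_size=0.1, l_hit=0.85, l_miss=-0.4, l_min=-2.0, l_max=3.5):
        self.voxel_size = voxel_size
        self.l_hit, self.l_miss = l_hit, l_miss
        self.l_min, self.l_max = l_min, l_max
        self.log_odds = {}  # voxel index (i, j, k) -> log-odds of occupancy

    def key(self, point):
        """Integer voxel index containing a 3D point."""
        return tuple(math.floor(c / self.voxel_size) for c in point)

    def update(self, point, hit):
        """Record one observation: hit=True if occupied, False if seen free."""
        k = self.key(point)
        l = self.log_odds.get(k, 0.0) + (self.l_hit if hit else self.l_miss)
        self.log_odds[k] = min(self.l_max, max(self.l_min, l))

    def probability(self, point):
        """Occupancy probability; unobserved voxels default to 0.5."""
        l = self.log_odds.get(self.key(point), 0.0)
        return 1.0 / (1.0 + math.exp(-l))
```

Because an obstacle that moves away produces repeated "free" observations, its voxels decay back toward unoccupied, which is how the model keeps up with a changing workspace.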

Real-time 3D reconstruction also enables autonomous mapping, in which the robot builds a map of an unfamiliar environment as it explores, often while simultaneously estimating its own position within that map (SLAM, simultaneous localization and mapping). This is essential in applications such as autonomous exploration, search-and-rescue missions, and warehouse automation.
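To decide where to go next while mapping an unknown space, one classic approach is frontier-based exploration: the robot looks for known-free cells that border unexplored space and drives toward them. The sketch below finds those frontier cells on a 2D grid, assuming the 3D map has already been projected into free, occupied, and unknown cells; the constants and the function name `find_frontiers` are hypothetical.

```python
FREE, OCCUPIED, UNKNOWN = 0, 1, -1  # hypothetical cell labels


def find_frontiers(grid):
    """Return free cells that border unknown space.

    grid is a 2D list of FREE, OCCUPIED and UNKNOWN values. An exploring robot
    would typically drive to a nearby frontier, rescan, update the map, and repeat
    until no frontiers remain.
    """
    rows, cols = len(grid), len(grid[0])
    frontiers = []
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != FREE:
                continue
            neighbours = ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1))
            if any(0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == UNKNOWN
                   for nr, nc in neighbours):
                frontiers.append((r, c))
    return frontiers
```

When the list comes back empty, every reachable region has been observed and the map is complete.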

Challenges and Future Directions

While 3D modeling has greatly expanded what robots can do, several challenges remain.

1. Computational Complexity

Generating and updating 3D models in real time can be computationally expensive, especially for robots operating in large or highly dynamic environments. Collecting sensor data, processing it, and integrating it into a 3D model all require significant computational resources. As robots handle larger datasets, optimizations such as downsampling point clouds, using efficient spatial data structures (for example, octrees), and offloading work to GPUs become crucial for efficient performance.
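Downsampling is one of the cheapest ways to cut this cost. The sketch below shows voxel-grid downsampling: space is divided into cubes of a chosen edge length, and all points falling in the same cube are replaced by their centroid, bounding the point count by the number of occupied voxels. The function name `voxel_downsample` and the voxel size in the usage line are hypothetical choices.

```python
import math


def voxel_downsample(points, voxel_size):
    """Replace all points in each cubic voxel with their centroid.

    points is a list of (x, y, z) tuples; voxel_size is the voxel edge length
    in the same units. Output order follows the first point seen in each voxel.
    """
    sums = {}  # voxel index -> [sum_x, sum_y, sum_z, count]
    for x, y, z in points:
        key = (math.floor(x / voxel_size), math.floor(y / voxel_size), math.floor(z / voxel_size))
        acc = sums.setdefault(key, [0.0, 0.0, 0.0, 0])
        acc[0] += x
        acc[1] += y
        acc[2] += z
        acc[3] += 1
    return [(sx / n, sy / n, sz / n) for sx, sy, sz, n in sums.values()]


# Usage: two nearby points collapse into one; the distant point survives.
reduced = voxel_downsample([(0.01, 0.01, 0.01), (0.03, 0.03, 0.03), (0.5, 0.5, 0.5)], 0.1)
```

The voxel size trades detail for speed: larger voxels mean fewer points to process but coarser geometry.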

2. Accuracy and Precision

In tasks that require fine manipulation, such as surgery or assembling delicate components, the accuracy of 3D models is paramount: small errors in depth perception or object placement can cause significant operational failures. Advances in sensors and algorithms continue to improve the precision of 3D modeling, but maintaining high accuracy remains difficult, particularly in cluttered or chaotic environments where objects partially hide one another from the sensors.
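One reason accuracy is hard to maintain is that depth error grows quickly with distance for many sensors. For a stereo camera, depth follows Z = f·B/d (focal length f in pixels, baseline B, disparity d in pixels), so a small disparity error δd produces a depth error of roughly δZ ≈ Z²/(f·B)·δd, which grows with the square of the range. The sketch below evaluates that approximation; the default half-pixel disparity error, the function name, and the example camera parameters are hypothetical.

```python
def stereo_depth_error(z, focal_px, baseline_m, disparity_err_px=0.5):
    """Approximate depth uncertainty of a stereo camera at range z (metres).

    Differentiating Z = f * B / d gives |dZ| ~= Z**2 / (f * B) * |dd|, valid when
    the disparity error is small relative to the disparity itself.
    """
    return z ** 2 / (focal_px * baseline_m) * disparity_err_px


# Usage: hypothetical camera with f = 500 px and a 10 cm baseline.
err_near = stereo_depth_error(1.0, 500.0, 0.1)  # about 1 cm at 1 m
err_far = stereo_depth_error(2.0, 500.0, 0.1)   # about 4 cm at 2 m
```

Doubling the distance quadruples the expected error, which is why precision tasks keep the sensor close to the work or use a wider baseline.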

3. Integration with Other Technologies

As robotics advances, integrating 3D modeling with technologies such as augmented reality (AR) and virtual reality (VR) will open up new possibilities for industrial and consumer applications. These technologies can give humans a more intuitive way to interact with robots, letting them view the robot's 3D model in real time and assist the robot with its tasks.

Conclusion

Integrating 3D modeling into robotics has significantly improved the precision, autonomy, and versatility of robots across a wide range of applications. By enabling robots to perceive, interpret, and interact with their environments in three dimensions, 3D models are instrumental to performance in complex, dynamic tasks. As advances in sensor technology and machine learning continue, 3D modeling is likely to become even more central to robotics, paving the way for robots that are more capable, intelligent, and adaptable in real-world environments.