Introduction

The integration of vision systems and artificial intelligence (AI) in industrial robots has ushered in a new era of automation, where robots are capable of performing tasks with increasing levels of autonomy. One of the most groundbreaking developments in this space is the ability of robots to visually recognize tools used by human workers and, based on that recognition, plan and adapt their operations accordingly. This capacity for visual recognition and workflow adaptation opens up a myriad of possibilities in industrial settings, where robots can work alongside humans, performing tasks with precision, efficiency, and flexibility.

In industrial environments, tools play a critical role in the quality and efficiency of tasks. Getting a robot to recognize those tools, understand their intended use, and adapt its actions in real time as the workflow changes is a hard problem: it requires advanced computer vision algorithms, machine learning models, and context-aware planning systems. When robots can recognize tools and work smoothly with humans, the result can be higher productivity, improved safety, fewer human errors, and a better overall work experience.

This article explores how robots use visual recognition to identify tools and adapt their operations based on human actions, focusing on the underlying technologies, their real-world applications, and the future potential of such systems in industrial settings.

1. The Role of Visual Recognition in Industrial Robotics

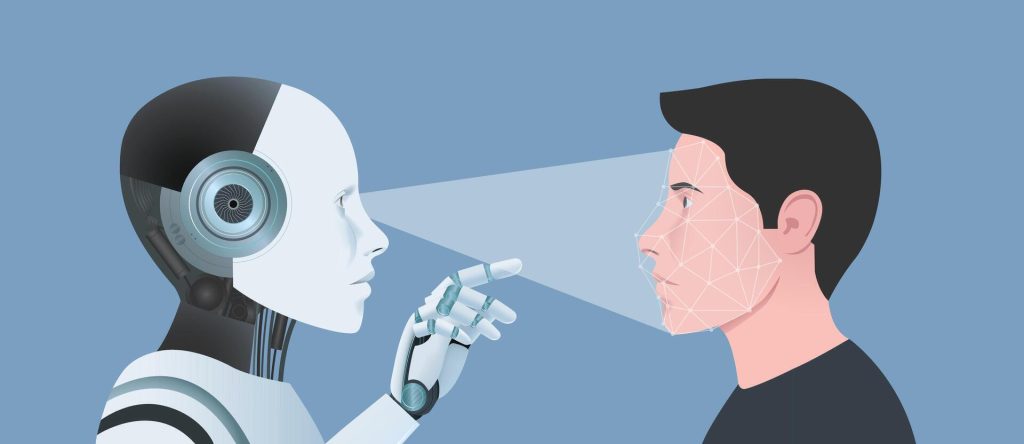

Visual recognition is the ability of a robot to interpret and understand images captured by cameras or other visual sensors. The integration of visual recognition into robotic systems enables robots to perceive the environment in a way that mimics human visual understanding, allowing them to interact intelligently with objects, tools, and people.

Key Components of Visual Recognition Systems in Robotics

Visual recognition systems consist of several key components that work in concert to allow robots to understand their surroundings:

- Cameras and Visual Sensors: Cameras, depth sensors, and LIDAR systems capture images or 3D data of the environment. These sensors provide the raw data that the robot will process.

- Preprocessing Algorithms: Before the data can be analyzed, it often needs to be cleaned and processed to remove noise and correct for distortions or lighting changes.

- Feature Extraction and Object Detection: Using convolutional neural networks (CNNs) or other machine learning models, the robot extracts relevant features from images to identify objects, such as tools.

- Contextual Understanding: Beyond just recognizing tools, robots need to understand the context in which these tools are being used. This involves understanding the relationship between tools, tasks, and workflow within the industrial process.

For instance, in an assembly line setting, a robot might be equipped with a camera and an AI algorithm that can recognize a wrench, understand its intended use (tightening bolts), and automatically adjust its movements to assist a human worker in performing the task.
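The wrench example can be sketched in a few lines of Python. This is a minimal illustration, not a real robot API: the `Detection` type, the `TOOL_CONTEXT` table, the action names, and the 0.8 confidence threshold are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class Detection:
    label: str         # tool class name reported by the vision model
    confidence: float  # model score in [0, 1]


# Hypothetical table: recognized tool -> (intended use, supporting robot action).
TOOL_CONTEXT = {
    "wrench": ("tightening bolts", "hold_part_steady"),
    "screwdriver": ("driving screws", "present_next_screw"),
}


def plan_assist(det: Detection, min_confidence: float = 0.8) -> Optional[Tuple[str, str]]:
    """Return (intended use, assist action), or None when the robot should not act."""
    if det.confidence < min_confidence:
        return None  # too uncertain: do nothing rather than guess
    # Unknown tools also yield None, so the robot stays passive.
    return TOOL_CONTEXT.get(det.label)


print(plan_assist(Detection("wrench", 0.93)))
```

Returning `None` for low-confidence or unknown tools keeps the robot passive by default, which is usually the safer choice next to a human worker.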

2. Tool Identification: The First Step in Workflow Planning

The ability to visually recognize tools is one of the foundational capabilities for robots working in industrial environments. In many cases, a human worker’s actions with tools serve as a guide for the robot, which needs to interpret these actions and respond accordingly.

Challenges in Tool Identification

The recognition of tools by robots is not as simple as distinguishing between a wrench and a hammer. Industrial tools often come in various shapes, sizes, and configurations, and can be used in a wide range of contexts. Therefore, robots need to identify tools not just based on their shape, but also based on the context in which they are used.

- Shape and Size Variability: Tools may come in different forms and designs, making it difficult to recognize them based on appearance alone. A wrench might differ in size or shape depending on the specific task or manufacturer.

- Environmental Variability: Tools may be used in different environments or under varying conditions. For example, a tool may be covered in grease or dirt, which could affect its appearance to a visual recognition system.

- Real-Time Adaptability: The robot must recognize tools in real time as they are handled by the worker, which requires processing images quickly and efficiently.
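One common way to cope with noisy per-frame results (a greasy tool misread for a frame or two) without giving up real-time response is to smooth labels over a short window of recent frames. The sketch below uses a simple majority vote; the `LabelSmoother` class, the window of 5 frames, and the 3-vote threshold are illustrative assumptions, not values from any particular system.

```python
from collections import Counter, deque


class LabelSmoother:
    """Report a tool label only once it wins enough votes in recent frames."""

    def __init__(self, window: int = 5, min_votes: int = 3):
        self.frames = deque(maxlen=window)  # most recent per-frame labels
        self.min_votes = min_votes
        self.stable = None                  # last label that passed the vote

    def update(self, label):
        self.frames.append(label)
        top, votes = Counter(self.frames).most_common(1)[0]
        if votes >= self.min_votes:
            self.stable = top
        return self.stable


smoother = LabelSmoother()
for frame_label in ["wrench", "wrench", "hammer", "wrench", "wrench"]:
    stable = smoother.update(frame_label)
print(stable)
```

A larger window rejects more noise but makes the robot slower to notice that the worker really has switched tools, so the window size is a trade-off between stability and responsiveness.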

Technologies for Tool Recognition

- Deep Learning and CNNs: Convolutional neural networks (CNNs) are particularly effective at recognizing objects in images, even when there is variability in the tool’s appearance. By training CNNs on large datasets of labeled images, robots can learn to recognize and classify tools with high accuracy.

- 3D Vision Systems: Depth cameras, LIDAR, and stereoscopic vision systems enable robots to recognize objects not just in 2D, but in three-dimensional space. This allows robots to understand the shape, orientation, and size of tools, improving recognition in complex environments.

- Multi-Sensor Fusion: Combining data from multiple sensors, such as cameras, force sensors, and proximity sensors, enhances the robot’s ability to recognize tools accurately. Multi-sensor fusion is especially valuable when tools are partially obscured or when lighting conditions are poor.
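As a rough illustration of multi-sensor fusion, the sketch below combines per-sensor class scores with a weighted average. The sensor names, the weights, and the `fuse_scores` function are assumptions for the example; real systems often use more principled probabilistic fusion.

```python
def fuse_scores(sensor_scores, weights):
    """Weighted average of per-sensor class scores, renormalized to sum to 1."""
    fused = {}
    for sensor, scores in sensor_scores.items():
        w = weights.get(sensor, 0.0)  # sensors without a weight are ignored
        for label, p in scores.items():
            fused[label] = fused.get(label, 0.0) + w * p
    total = sum(fused.values())
    if total == 0:
        raise ValueError("no usable sensor evidence")
    return {label: s / total for label, s in fused.items()}


scores = {
    "rgb_camera": {"wrench": 0.5, "pliers": 0.5},    # occluded view: ambiguous
    "depth_camera": {"wrench": 0.9, "pliers": 0.1},  # 3D shape is clearer
}
weights = {"rgb_camera": 0.4, "depth_camera": 0.6}

fused = fuse_scores(scores, weights)
best = max(fused, key=fused.get)
print(best, round(fused[best], 2))
```

Here the ambiguous color image alone cannot separate the two tools, but the depth reading tips the fused score toward the wrench.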

Once the robot successfully identifies a tool, the next step is determining how to incorporate it into the workflow, which leads us to the concept of workflow planning.

3. Workflow Planning: Adapting Robot Operations Based on Tools

Workflow planning is a critical component of a robot’s ability to operate autonomously in an industrial setting. Once a robot has recognized the tool in the worker’s hand, it must plan its actions to align with the broader task or process being carried out. This involves adapting its movements, positioning, and timing to complement the human worker and contribute to the overall workflow.

Understanding Workflow in Industrial Settings

In industrial environments, workflows typically consist of a series of steps or tasks that must be completed in a specific sequence. These tasks might include assembly, inspection, testing, or packaging. Each task may require a different set of tools, materials, and movements.

Robots need to:

- Understand Task Context: The robot must interpret what the worker is doing with the tool and understand the task at hand. For example, if the worker is holding a power drill, the robot must determine whether the worker is drilling a hole or driving a fastener.

- Adapt to Dynamic Changes: Industrial workflows are rarely static. A worker might switch tools or adjust the task in real time, and the robot must be able to respond to these changes by adapting its actions.

- Coordinate with Human Workers: The robot must also plan its actions to avoid interfering with human workers. This requires real-time synchronization between human and robotic movements.
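These requirements can be sketched as a small step tracker: the recognized tool tells the robot which step the worker is on, and the robot prepares for the step that follows. The `WORKFLOW` list, the tool names, and the returned task strings are hypothetical and only illustrate the idea.

```python
# Hypothetical workflow: ordered (step name, tool the worker uses for it).
WORKFLOW = [
    ("drill pilot holes", "drill"),
    ("fasten bracket", "wrench"),
    ("inspect joint", "gauge"),
]


def locate_step(tool, current_index):
    """Find the step matching the tool, searching forward from the current step."""
    n = len(WORKFLOW)
    for offset in range(n):
        i = (current_index + offset) % n  # wraps around so a step back is also found
        if WORKFLOW[i][1] == tool:
            return i
    return None


def next_robot_task(tool, current_index):
    """Return (updated step index, what the robot should do now)."""
    i = locate_step(tool, current_index)
    if i is None:
        return current_index, "wait"  # unknown tool: stay out of the way
    if i + 1 < len(WORKFLOW):
        return i, f"prepare for: {WORKFLOW[i + 1][0]}"
    return i, "wait"  # last step: nothing left to prepare


print(next_robot_task("wrench", 0))
```

Because the step is re-derived from the tool on every call, the tracker follows the worker when they switch tools or skip ahead instead of assuming a fixed script.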

Techniques for Workflow Planning

- Reinforcement Learning: In some applications, robots use reinforcement learning (RL) to improve their ability to adapt to complex workflows. In RL, the robot learns by interacting with its environment, receiving feedback on its actions, and adjusting its behavior over time. This is especially useful in dynamic environments where predefined scripts might not suffice.

- Task-Specific Motion Planning: Using algorithms such as Rapidly-exploring Random Trees (RRT) or A* search, robots can plan trajectories that avoid obstacles while performing tasks like assembly or welding. These algorithms let the robot navigate around the workspace and adjust its motions based on the task at hand.

- Human-Robot Collaboration Models: To ensure that robots work seamlessly with human workers, collaborative robot (cobot) systems are increasingly being employed. Cobots are designed to work alongside humans, adjusting their actions based on real-time interactions. These robots typically use sensors to detect human presence and avoid collisions, while synchronizing their movements with the worker’s actions.
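To make the motion-planning idea concrete, here is a minimal A* search on a small occupancy grid. It is a simplified sketch: a real manipulator plans in joint or Cartesian space with continuous collision checking, and the grid, unit step costs, and 4-connected moves here are assumptions chosen to keep the example short.

```python
import heapq


def astar(grid, start, goal):
    """Shortest 4-connected path on a grid; cells equal to 1 are obstacles."""
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance: admissible for unit-cost 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    came_from = {}
    best_cost = {start: 0}
    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = [cell]
            while cell in came_from:  # walk parents back to the start
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if cost > best_cost[cell]:
            continue  # stale heap entry; a cheaper route was found already
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < best_cost.get(nxt, float("inf")):
                    best_cost[nxt] = new_cost
                    came_from[nxt] = cell
                    heapq.heappush(open_heap, (new_cost + h(nxt), new_cost, nxt))
    return None  # goal unreachable


grid = [
    [0, 0, 0],
    [1, 1, 0],  # a wall with a gap on the right
    [0, 0, 0],
]
path = astar(grid, (0, 0), (2, 0))
print(path)
```

In a collaborative cell, the obstacle cells could be refreshed from the sensors that track the worker's position, with the path replanned whenever the grid changes.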

4. Case Studies and Applications of Tool Recognition and Workflow Planning

Several industries have successfully implemented robots that recognize tools and adjust their operations based on human workflow. Below are a few key examples where these technologies are making a significant impact.

1. Collaborative Robotics in Manufacturing

In manufacturing environments, particularly in the automotive industry, robots equipped with visual recognition systems are assisting human workers in performing tasks like assembly and quality inspection. These robots are often equipped with cameras and AI algorithms that allow them to recognize tools, such as wrenches, screwdrivers, and drills.

For instance, a robot might observe a worker using a wrench to tighten bolts on a car chassis. Upon recognizing the tool, the robot might automatically retrieve the next part, position it for the worker, and then prepare to assist in further steps, like tightening additional bolts or performing quality checks. This reduces the time the worker spends moving between tasks and lets the robot fit smoothly into the workflow.

2. Robotics in Surgical Environments

In surgery, precision is paramount, and robotic systems are increasingly used to assist surgeons with various procedures. Platforms such as the da Vinci Surgical System are teleoperated: the surgeon controls the instruments directly from a console rather than the robot acting on its own. Visual recognition of surgical tools is being explored as a way to add workflow awareness to such platforms. For example, a system might recognize which instrument is in use, infer the current phase of the procedure, and adapt its assistance without interrupting the surgeon’s actions.

Robotic assistance of this kind already supports minimally invasive procedures, and tool-aware assistance aims to further improve efficiency and precision while reducing human error.

3. Warehouse and Logistics Robotics

In warehouses, robots equipped with vision systems are used to handle and organize items. These robots recognize tools or items that need to be moved, packed, or sorted, and adapt their actions accordingly. For instance, when robots identify tools or materials in a worker’s hand, they adjust their movements to assist in loading, unloading, or inventorying products.

These systems are often integrated with advanced workflow management software that ensures the robots’ actions are synchronized with the broader operational processes in the warehouse.

5. The Future of Tool Recognition and Workflow Planning

The potential for tool recognition and workflow planning in robots is vast, and as technology continues to advance, we can expect even more seamless and sophisticated interactions between robots and human workers. In the future, we may see:

- Improved AI and Machine Learning Algorithms: As AI models become more advanced, robots will be able to recognize a broader range of tools, adapt to more complex workflows, and make autonomous decisions based on real-time data.

- Smarter Collaboration: The evolution of collaborative robots (cobots) will allow robots to work alongside humans with even greater efficiency, taking over repetitive or dangerous tasks and leaving humans to focus on more complex decision-making.

- Adaptive Manufacturing Systems: The future of manufacturing will likely see the rise of fully adaptive production lines, where robots can switch tasks, tools, and roles in response to real-time changes in the production process.

Conclusion

The ability of robots to recognize tools and adapt their actions according to human workflow is revolutionizing industries from manufacturing to healthcare. By combining cutting-edge visual recognition technology with advanced workflow planning systems, robots are becoming more intelligent, flexible, and capable of working alongside humans in highly dynamic environments. As these technologies continue to improve, we can expect robots to play an even greater role in optimizing industrial processes, improving productivity, and ensuring safer working conditions for humans. The future of industrial robotics is one of deeper collaboration, increased efficiency, and more intelligent systems that augment human capabilities across a wide range of applications.