Introduction

In recent years, computer vision has become a crucial component in industries such as autonomous vehicles, robotics, surveillance, and industrial automation. The ability of machines to perceive and interpret visual data is enabling new functionality and improving safety and efficiency. However, as demand for real-time processing of visual data grows, traditional cloud computing approaches fall short for applications that require low latency, fast decision-making, and offline operation.

This is where edge computing comes in. Edge computing processes data close to where it is generated, at the “edge” of the network (on the device itself or at a nearby local data center), rather than sending it to distant cloud servers. By avoiding long network round trips and reducing the volume of data that must be transmitted, edge computing can substantially improve the responsiveness of computer vision systems in real-time applications.

In this article, we explore how edge computing is becoming a key enabler of real-time computer vision: the technologies behind it, the benefits it provides, and the challenges of integrating it into vision systems. We also examine practical applications where this combination of technologies is already having a significant impact.

The Need for Real-Time Processing in Computer Vision

1. Challenges of Real-Time Vision Systems

Computer vision systems have evolved to handle increasingly complex tasks, such as object detection, facial recognition, scene analysis, and gesture recognition. These systems are typically powered by deep learning models, particularly convolutional neural networks (CNNs), which require extensive computation to process high-resolution images and video streams. In many applications, real-time performance is not a luxury but a necessity. Core challenges in real-time vision include:

- Latency: The time it takes for an image to be processed and for a decision to be made must be minimal. Any delay can compromise the functionality of systems such as autonomous vehicles or industrial robots, where immediate responses are critical.

- Bandwidth and Connectivity: Transmitting large amounts of visual data from cameras to remote cloud servers for processing can create substantial delays and increase the network load, especially in environments with limited or unreliable connectivity (e.g., remote locations, factories with poor network infrastructure).

- Energy Efficiency: In applications such as drones and wearable devices, energy consumption is a key constraint. Offloading processing to the cloud means continuously transmitting video over a wireless link, which can consume considerable power on the device.
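To put the bandwidth challenge in numbers, the sketch below computes the bitrate of a single uncompressed camera stream. The resolution, frame rate, and 3-bytes-per-pixel (8-bit RGB) format are illustrative assumptions; real deployments usually compress video, which cuts this figure substantially but adds encoding time, so treat the raw number as an upper bound.

```python
def raw_video_bitrate_bps(width: int, height: int, fps: float, bytes_per_pixel: int = 3) -> float:
    """Bits per second for an uncompressed stream (8-bit RGB = 3 bytes per pixel)."""
    return width * height * bytes_per_pixel * 8 * fps


# Assumed example: one 1080p camera at 30 frames per second, uncompressed RGB.
bps = raw_video_bitrate_bps(1920, 1080, 30)
print(f"{bps / 1e9:.2f} Gbit/s per camera")  # 1.49 Gbit/s per camera
```

Multiply by the number of cameras on a vehicle or factory floor and it becomes clear why shipping raw frames to a remote server is rarely practical.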

2. Real-Time Applications of Computer Vision

Several industries rely heavily on real-time computer vision, where milliseconds matter. Examples of such applications include:

- Autonomous Vehicles: Self-driving cars rely on computer vision to navigate safely by recognizing road signs, pedestrians, obstacles, and lane markings in real time. Any delay in processing the visual data could result in a dangerous situation.

- Manufacturing and Robotics: Automated systems in manufacturing and robotics must make quick decisions based on visual input to perform tasks such as assembly, quality inspection, or picking and placing objects.

- Healthcare and Medical Imaging: Real-time analysis of medical images, such as during robot-assisted surgery, can improve precision and decision-making, potentially saving lives.

- Surveillance and Security: Real-time facial recognition, activity monitoring, and anomaly detection are essential in surveillance systems used in airports, public spaces, and private security.

What is Edge Computing?

1. Definition of Edge Computing

Edge computing is a distributed computing model in which data processing and analysis occur close to the data source rather than in centralized cloud data centers. In the context of computer vision, this means that visual data from cameras and sensors is processed on local devices or edge servers, minimizing large data transfers and reducing latency.

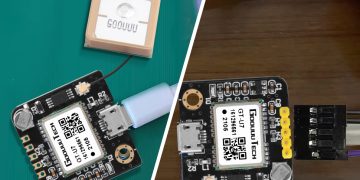

Edge devices might include smart cameras, autonomous vehicles, drones, or industrial machines with enough computing resources to process data locally. These devices often run artificial intelligence (AI) models and may use hardware accelerators such as graphics processing units (GPUs), tensor processing units (TPUs), or field-programmable gate arrays (FPGAs) to speed up image and video processing.

2. How Edge Computing Improves Real-Time Vision

Edge computing brings several benefits to computer vision systems, especially in real-time applications. Here’s how it addresses the key challenges mentioned earlier:

- Reduced Latency: By processing data locally, edge devices can make decisions in real time without waiting on a network round trip. This shortens the time between capturing an image and acting on it.

- Bandwidth Efficiency: With edge computing, only critical data or processed insights (rather than raw image data) need to be sent to the cloud or other centralized servers. This reduces network congestion and makes the system more efficient.

- Offline Operation: Many edge devices can operate independently of network connectivity, allowing them to function in remote or unreliable network environments, such as mining sites, factories, or even in space.

- Energy Efficiency: Sending less data to the cloud reduces the energy spent on wireless transmission. Edge devices can also process only the data they actually need, which helps extend the battery life of mobile and remote devices.
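The latency benefit can be illustrated as a simple end-to-end budget: the total delay is the sum of every stage between capture and decision. In the sketch below, all stage names and millisecond values are hypothetical placeholders chosen for illustration, not measurements; the point is structural, since the uplink and downlink legs count against the deadline no matter how fast the cloud hardware is.

```python
# Hypothetical stage timings in milliseconds (illustrative assumptions, not measurements).
EDGE_PIPELINE = {"capture": 5.0, "inference_on_device": 25.0, "decision": 1.0}
CLOUD_PIPELINE = {
    "capture": 5.0,
    "encode": 8.0,
    "uplink": 40.0,
    "inference_in_cloud": 10.0,  # assumed faster than on-device inference
    "downlink": 20.0,
    "decision": 1.0,
}


def end_to_end_ms(stages: dict[str, float]) -> float:
    """Total latency is the sum of all sequential stages."""
    return sum(stages.values())


def meets_deadline(stages: dict[str, float], deadline_ms: float) -> bool:
    return end_to_end_ms(stages) <= deadline_ms


DEADLINE_MS = 50.0  # hypothetical per-frame budget
for name, stages in [("edge", EDGE_PIPELINE), ("cloud", CLOUD_PIPELINE)]:
    total = end_to_end_ms(stages)
    print(f"{name}: {total:.0f} ms, meets {DEADLINE_MS:.0f} ms deadline: {meets_deadline(stages, DEADLINE_MS)}")
```

With these placeholder numbers the edge path totals 31 ms and the cloud path 84 ms, even though the cloud inference stage itself is faster.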

Key Technologies Enabling Edge Computing in Computer Vision

Several advancements in hardware and software are driving the widespread adoption of edge computing for real-time computer vision applications.

1. Artificial Intelligence on the Edge

Edge devices now support powerful AI and machine learning algorithms, enabling real-time object recognition, scene segmentation, activity recognition, and more, directly on the device. Two developments make this possible:

- Inference Processing: With pre-trained models, edge devices can process images, detect objects, and make decisions without needing to send data to the cloud for further analysis.

- Model Compression and Optimization: AI models are being optimized for edge deployment using techniques such as model quantization, pruning, and knowledge distillation. This allows more complex models to run efficiently on edge hardware with limited resources.
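As a concrete example of one of these techniques, the sketch below shows symmetric per-tensor post-training quantization: float weights are mapped to integers in [-127, 127] using a single scale factor, and can be mapped back approximately. This is a minimal pure-Python illustration under that assumed scheme, not the API of any framework; toolkits such as TensorFlow Lite and PyTorch ship their own quantization tooling with more elaborate options (per-channel scales, calibration data, zero points).

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor quantization of float weights to integers in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # One scale factor for the whole tensor; guard against an all-zero tensor.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the range used here.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: list[int], scale: float) -> list[float]:
    """Map quantized integers back to approximate float values."""
    return [v * scale for v in q]


weights = [0.42, -1.27, 0.0, 0.9, -0.05]  # made-up example weights
q, scale = quantize_int8(weights)
approx = dequantize_int8(q, scale)
max_error = max(abs(a - w) for a, w in zip(approx, weights))
print(q)          # [42, -127, 0, 90, -5]
print(max_error)  # bounded by scale / 2 because of rounding
```

Each weight now fits in a single byte, and integer arithmetic is typically cheaper on edge accelerators, at the cost of a small, bounded rounding error.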

2. Hardware Accelerators

To meet the processing demands of real-time computer vision, edge devices are increasingly equipped with hardware accelerators that speed up AI workloads. These include:

- GPUs: Graphics Processing Units are commonly used in high-performance computing and are now being deployed in edge devices to accelerate image processing tasks.

- TPUs: Tensor Processing Units, designed specifically for AI tasks, offer significant performance improvements for real-time deep learning applications.

- FPGAs: Field-Programmable Gate Arrays are reconfigurable circuits that can be tailored to specific computer vision tasks, providing high-speed processing while often consuming less power than general-purpose processors.

- ASICs: Application-Specific Integrated Circuits are specialized chips designed for specific use cases, including real-time vision tasks.

3. 5G Connectivity

The rollout of 5G is expected to further extend the capabilities of edge computing. With lower latency, higher throughput, and greater reliability than previous mobile networks, 5G should enable faster communication between edge devices and cloud servers when needed. In real-time computer vision applications, 5G could help synchronize data across multiple edge devices or back up mission-critical data to the cloud.

Applications of Edge Computing in Real-Time Computer Vision

1. Autonomous Vehicles

Autonomous vehicles rely heavily on real-time computer vision to navigate, detect obstacles, and make decisions on the road. Edge computing allows these vehicles to process data from multiple cameras, LiDAR sensors, and radar systems locally, so driving decisions do not depend on a network round trip. This minimizes latency, enabling the vehicle to react quickly to changes in its environment, such as sudden stops, pedestrians, or road hazards.
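To see why milliseconds matter, it helps to convert latency into distance: a vehicle keeps moving while a frame is still being processed. The sketch below assumes constant speed; the 100 km/h speed and the three latency values are hypothetical examples, not figures for any particular system.

```python
def distance_during_latency_m(speed_kmh: float, latency_ms: float) -> float:
    """Metres travelled at constant speed while a frame is still being processed."""
    speed_m_per_s = speed_kmh * 1000 / 3600
    return speed_m_per_s * latency_ms / 1000


for latency_ms in (20, 100, 300):  # hypothetical end-to-end latencies
    d = distance_during_latency_m(100, latency_ms)
    print(f"{latency_ms:>3} ms at 100 km/h -> {d:.2f} m travelled")
# 20 ms -> 0.56 m, 100 ms -> 2.78 m, 300 ms -> 8.33 m
```

At highway speed, every extra 100 ms of processing delay means close to three more metres travelled before the vehicle can begin to respond.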

2. Industrial Automation

In industrial settings, computer vision is used for quality control, object tracking, and predictive maintenance. Edge-enabled robots and vision systems can inspect products on production lines, detect defects, and make adjustments in real time without relying on cloud servers. This improves efficiency, reduces downtime, and speeds up decision-making.

3. Healthcare and Medical Imaging

In medical environments, such as robotic surgeries or diagnostic imaging, real-time image processing is essential for the accuracy and safety of procedures. Edge computing allows real-time processing of medical images, such as X-rays or CT scans, directly on the medical device, reducing the time between image capture and diagnosis, which can be critical in emergency situations.

4. Surveillance and Security

Edge computing is increasingly used in smart surveillance systems to monitor public spaces, buildings, and private properties. By processing video feeds locally, edge devices can perform real-time facial recognition, activity monitoring, and anomaly detection, enabling security personnel to respond quickly to potential threats.
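A simple way to see how local processing keeps raw video on the device is an on-camera trigger that only raises an event when something changes. The sketch below uses basic frame differencing, a deliberately simple stand-in for the learned anomaly detectors described above; the frame representation (nested lists of 0-255 grayscale values) and both thresholds are hypothetical choices for illustration.

```python
Frame = list[list[int]]  # grayscale pixel intensities, 0-255


def changed_fraction(prev: Frame, curr: Frame, pixel_threshold: int = 25) -> float:
    """Fraction of pixels whose intensity changed by more than pixel_threshold."""
    changed = total = 0
    for row_prev, row_curr in zip(prev, curr):
        for a, b in zip(row_prev, row_curr):
            total += 1
            if abs(a - b) > pixel_threshold:
                changed += 1
    return changed / total if total else 0.0


def should_raise_event(prev: Frame, curr: Frame, area_threshold: float = 0.01) -> bool:
    """Flag the frame pair for upstream review only if enough of the image changed."""
    return changed_fraction(prev, curr) > area_threshold


background = [[10] * 4 for _ in range(4)]
intruder = [row[:] for row in background]
for y in (1, 2):
    for x in (1, 2):
        intruder[y][x] = 200  # a bright 2x2 object appears

print(changed_fraction(background, intruder))      # 0.25
print(should_raise_event(background, background))  # False
print(should_raise_event(background, intruder))    # True
```

Only frames that trip the trigger need further analysis or transmission; in a real system this step would typically be replaced or followed by a trained detection model.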

5. Smart Cities

Smart cities leverage edge computing to process data from a variety of sensors, such as traffic cameras, public safety cameras, and environmental sensors. Real-time analysis of traffic patterns, for example, can help optimize traffic flow, reduce congestion, and enhance public safety.

Challenges and Future Directions

While edge computing provides significant benefits for real-time computer vision, it also presents several challenges that need to be addressed:

1. Limited Resources

Edge devices typically have limited computational power, memory, and storage compared to cloud servers. Therefore, optimizing AI models for edge deployment is crucial to ensuring high performance without overloading the system.
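A quick way to check whether a model fits on a device is to estimate the memory taken by its weights: parameter count times bytes per parameter. The 25-million-parameter model below is a hypothetical example, and the estimate deliberately ignores activations, runtime overhead, and framework buffers, so the true footprint will be larger.

```python
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1}


def weight_footprint_mb(num_params: int, dtype: str) -> float:
    """Approximate weight storage in megabytes (10**6 bytes); excludes activations."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e6


NUM_PARAMS = 25_000_000  # hypothetical model size
for dtype in BYTES_PER_PARAM:
    print(f"{dtype}: {weight_footprint_mb(NUM_PARAMS, dtype):.0f} MB")
# float32: 100 MB, float16: 50 MB, int8: 25 MB
```

This is why reduced-precision formats are attractive on memory-constrained edge hardware: the same parameter count takes a quarter of the space at int8.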

2. Security and Privacy

Edge devices often process sensitive data, such as images from security cameras or medical imaging. Ensuring that this data is processed securely, and that privacy is maintained, is paramount.
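One basic building block for securing data that leaves an edge device is message authentication, so a receiver can detect tampered or forged results. The sketch below uses an HMAC-SHA256 tag from Python's standard `hmac` module. The shared key and message are hypothetical, and this covers integrity and authenticity only: confidentiality still requires encryption (for example TLS in transit), and real deployments need secure key provisioning.

```python
import hashlib
import hmac

# Hypothetical key; in practice each device gets a key from secure provisioning.
SHARED_KEY = b"replace-with-provisioned-device-key"


def sign(payload: bytes, key: bytes = SHARED_KEY) -> str:
    """Compute an HMAC-SHA256 tag for a message produced on the edge device."""
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify(payload: bytes, tag: str, key: bytes = SHARED_KEY) -> bool:
    """Constant-time comparison avoids leaking information through timing."""
    return hmac.compare_digest(sign(payload, key), tag)


msg = b'{"label": "person", "confidence": 0.91}'
tag = sign(msg)
print(verify(msg, tag))         # True
print(verify(msg + b" ", tag))  # False: the payload was modified
```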

3. Interoperability

With the increasing use of multiple edge devices in complex systems, ensuring that different devices can communicate and share data effectively is essential for the success of edge computing in large-scale computer vision applications.
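Interoperability largely comes down to devices agreeing on what they exchange. The sketch below defines a small, hypothetical detection message (the field names and the bounding-box convention are illustrative assumptions, not an established standard) and serializes it to JSON so that devices from different vendors could parse the same payload. Production systems often use a formal schema language or established formats such as Protocol Buffers instead.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class Detection:
    """Hypothetical shared message format for detections from any edge device."""
    device_id: str
    timestamp_ms: int
    label: str
    confidence: float
    bbox: tuple[int, int, int, int]  # x, y, width, height in pixels


def to_json(d: Detection) -> str:
    # sort_keys gives a stable field order, which simplifies comparison and logging.
    return json.dumps(asdict(d), sort_keys=True)


def from_json(s: str) -> Detection:
    data = json.loads(s)
    data["bbox"] = tuple(data["bbox"])  # JSON arrays decode as lists
    return Detection(**data)


d = Detection("cam-07", 1_700_000_000_000, "person", 0.91, (120, 48, 64, 128))
wire = to_json(d)
print(wire)
print(from_json(wire) == d)  # True: the message survives a round trip
```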

Conclusion

As real-time computer vision applications continue to expand, edge computing has emerged as a critical technology for efficient, low-latency, and secure visual data processing. By shifting computation closer to where data is generated, edge computing enhances the capabilities of vision systems in autonomous vehicles, industrial automation, healthcare, and security. Despite the challenges, continued advances in AI, hardware accelerators, and 5G connectivity are poised to make edge computing an even more integral part of the future of computer vision.