Introduction

The evolution of robotics has been marked by significant strides in object manipulation. At the heart of many robotic tasks lies the challenge of object grasping, where robots must interact with objects of diverse shapes, sizes, and materials. Traditional robots, often limited by pre-programmed, rigid algorithms, struggled with the flexibility required to handle objects in dynamic environments. However, with the advent of machine learning (ML), artificial intelligence (AI), and advanced sensor technologies, robots can now engage in iterative processes that optimize their grasping strategies. By learning from each trial, robots can enhance their precision and ability to adapt to various objects, ultimately leading to more effective object manipulation.

In this article, we will delve into how robots optimize their strategies for grasping objects through repeated trials and feedback mechanisms. The discussion will cover the underlying technologies, the role of AI and machine learning, the applications across different industries, and the challenges that come with these advancements.

1. The Complexity of Object Grasping in Robotics

1.1 Grasping: A Fundamental Challenge in Robotics

Grasping objects is more complex than it may initially seem. The task requires precise coordination between a robot’s sensors, actuators, and algorithms to successfully pick up objects with varying shapes, textures, and properties. The key challenges in robotic grasping include:

- Object Shape and Orientation: Objects can have complex geometries that make it difficult to define an ideal grasping point. Unlike simpler shapes, irregular objects require advanced algorithms to determine how best to grip them.

- Surface Properties: Objects can be slippery, sticky, soft, or rigid. Each material requires different force and friction management strategies to prevent slipping or damage.

- Environmental Factors: Variations in lighting, obstacles, or the surrounding environment can impact a robot’s ability to perceive the object and determine the correct grasping strategy.

In industrial applications, the ability to handle a wide variety of objects with different characteristics is crucial. In environments like warehouses, manufacturing plants, or healthcare settings, robots need to learn to perform precise operations with diverse objects under variable conditions.
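
The friction point under Surface Properties can be made concrete with a back-of-the-envelope calculation. The sketch below assumes a two-finger parallel gripper holding an object against gravity with simple Coulomb friction at each contact. The function name, the safety factor, and the example friction coefficients are illustrative assumptions, not values from any particular robot.

```python
G = 9.81  # gravitational acceleration, m/s^2


def min_grip_force(mass_kg, friction_coeff, safety_factor=2.0, contacts=2):
    """Normal force per finger (N) needed to hold an object against gravity.

    Assumes Coulomb friction: each contact resists up to mu * N of load,
    so contacts * mu * N must be at least the object's weight.
    """
    if mass_kg < 0 or friction_coeff <= 0 or contacts <= 0:
        raise ValueError("mass must be >= 0; friction and contacts must be > 0")
    weight = mass_kg * G
    return safety_factor * weight / (contacts * friction_coeff)


# Hypothetical friction coefficients for the same 0.5 kg object.
for label, mu in [("rubbery surface", 0.8), ("slippery surface", 0.2)]:
    print(f"{label}: {min_grip_force(0.5, mu):.1f} N per finger")
```

Under these assumptions, cutting the friction coefficient to a quarter quadruples the required grip force, which is why a slippery object may need more force than a fragile one can tolerate.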

2. Iterative Learning in Robotic Grasping

2.1 The Role of Reinforcement Learning (RL)

At the heart of iterative optimization in robotic grasping lies reinforcement learning (RL). RL is a type of machine learning in which an agent (in this case, the robot) learns to make decisions by interacting with its environment. Through trial and error, the robot evaluates its actions and refines them over time based on feedback.

- Exploration and Exploitation: During the early stages, the robot explores different strategies for grasping, trying out various approaches to pick up an object. Over time, it exploits the strategies that result in successful grasps.

- Reward Systems: In RL, a robot receives a positive reward when it successfully grasps an object or completes a task accurately. On the other hand, negative outcomes such as dropping the object or applying excessive force result in a penalty. Through this system, robots gradually improve their ability to perform grasping tasks.

- Policy Optimization: As the robot continues to perform actions, the learning algorithm optimizes the policy, that is, the decision-making rule that dictates how the robot should act in a given situation. This allows the robot to improve its grasping strategy iteratively.

RL enables robots to learn from mistakes and continuously improve, adapting to new challenges and environments.
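
The exploration, reward, and improvement loop described above can be sketched with an epsilon-greedy multi-armed bandit, the simplest special case of RL (there is no state, only a choice among strategies). Everything here is a toy assumption: the strategy names, the hidden success probabilities, and the +1/-1 reward scheme are made up for illustration and stand in for a real robot and environment.

```python
import random

# Hidden success probability of each hypothetical grasp strategy.
SUCCESS_PROB = {"top_pinch": 0.4, "side_wrap": 0.8, "edge_scoop": 0.6}


def simulate_grasp(strategy, rng):
    """Toy environment: +1 reward for a successful grasp, -1 penalty for a drop."""
    return 1.0 if rng.random() < SUCCESS_PROB[strategy] else -1.0


def train(strategies, trials=5000, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    value = {s: 0.0 for s in strategies}  # running mean reward per strategy
    count = {s: 0 for s in strategies}    # how often each strategy was tried
    for _ in range(trials):
        if rng.random() < epsilon:
            choice = rng.choice(strategies)          # explore: try something at random
        else:
            choice = max(strategies, key=value.get)  # exploit: use the best estimate so far
        reward = simulate_grasp(choice, rng)
        count[choice] += 1
        value[choice] += (reward - value[choice]) / count[choice]  # incremental mean
    return value, count


value, count = train(list(SUCCESS_PROB))
for name in SUCCESS_PROB:
    print(f"{name}: estimated reward {value[name]:+.2f} over {count[name]} trials")
```

The greedy choice plays the role of the policy here. A full RL system would condition that choice on what the robot observes, but the explore-then-exploit pattern is the same.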

2.2 The Power of Simulation in Grasping Optimization

A major advantage of machine learning in robotics is the ability to simulate a vast number of interactions in a virtual environment before implementing the learned strategies in the real world.

- Simulated Training: Tools like Gazebo, MuJoCo, and CoppeliaSim (formerly V-REP) allow robots to perform large numbers of grasping trials in a simulated environment. These simulations enable robots to experiment with different grasping techniques on a wide variety of virtual objects, including those with complex shapes and textures. This approach helps robots build up knowledge without the time, cost, and wear of real-world physical testing.

- Data Generation: Simulated environments generate large datasets of successful and unsuccessful grasps, which can be used to refine the robot’s machine learning models. These datasets are used to train the algorithms that guide the robot’s real-world behavior.

- Real-World Transfer: Once a robot’s grasping strategy is optimized in a simulated environment, it can be transferred to the physical world. Because simulated physics and rendering never match reality exactly, the robot typically fine-tunes its model on a smaller number of real trials, a form of transfer learning, to adapt to real-world conditions such as lighting or unexpected object deformations.
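
The three steps above (simulated trials, dataset generation, and real-world fine-tuning) can be illustrated with a deliberately tiny sketch. It does not use any of the simulators named above. Instead, a made-up rule ("a grasp succeeds when normalized grip force exceeds a threshold") stands in for both the simulator and the real world, with the real threshold shifted to mimic a sim-to-real gap. All names, thresholds, and hyperparameters are assumptions chosen for illustration.

```python
import math
import random


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def make_grasps(n, slip_threshold, rng):
    """Toy trials: a grasp succeeds when normalized grip force exceeds a threshold."""
    trials = []
    for _ in range(n):
        force = rng.random()  # normalized grip force in [0, 1)
        trials.append((force, 1 if force > slip_threshold else 0))
    return trials


def fit(trials, w, b, lr, epochs, rng):
    """One-feature logistic regression trained with stochastic gradient descent."""
    trials = list(trials)
    for _ in range(epochs):
        rng.shuffle(trials)
        for x, y in trials:
            err = sigmoid(w * x + b) - y
            w -= lr * err * x
            b -= lr * err
    return w, b


def accuracy(trials, w, b):
    return sum((w * x + b > 0) == (y == 1) for x, y in trials) / len(trials)


def run_demo(seed=0):
    rng = random.Random(seed)
    sim = make_grasps(2000, 0.4, rng)       # plentiful simulated trials
    real_train = make_grasps(40, 0.6, rng)  # a few "real" trials with shifted physics
    real_test = make_grasps(500, 0.6, rng)
    w, b = fit(sim, 0.0, 0.0, lr=0.5, epochs=20, rng=rng)      # pretrain in simulation
    before = accuracy(real_test, w, b)
    w, b = fit(real_train, w, b, lr=0.5, epochs=200, rng=rng)  # fine-tune on real data
    after = accuracy(real_test, w, b)
    return before, after


before, after = run_demo()
print(f"real-world accuracy: sim-only {before:.2f}, fine-tuned {after:.2f}")
```

The point of the design is the data budget: the model sees thousands of cheap simulated trials but only a few dozen expensive real ones, and the fine-tuning pass only has to correct the mismatch between the two.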

3. Advanced AI Techniques in Robotic Grasping

3.1 Deep Learning for Object Recognition

For robots to accurately grasp objects, they first need to identify and understand them. Deep learning plays a crucial role in this process. By using convolutional neural networks (CNNs) and other advanced neural architectures, robots can process visual data and make decisions based on object characteristics.

- Object Detection: Robots use camera systems to detect and recognize objects in their environment. With deep learning, they can estimate the shape, orientation, and likely material of objects, which informs the optimal grasping approach.

- 3D Object Modeling: In some cases, robots use LiDAR or stereo cameras to create 3D models of objects, which provide more detailed information about the object’s spatial properties. These models are crucial for understanding the object’s geometry and ensuring an effective grasp.

- Grasp Pose Estimation: Once the object is recognized, deep learning algorithms estimate the best grasp pose, i.e., the optimal way for the robot to approach and grip the object. This pose is based on the robot’s knowledge of the object’s shape, weight distribution, and the task it needs to accomplish.
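
To make "grasp pose" concrete, the sketch below computes one for a flat object seen from above. It is a classical geometric heuristic (principal-axis analysis of the object's points), not a deep network: a learned estimator would replace the body of this function while producing the same kind of output. The function name and the choice of a parallel-jaw gripper are assumptions for illustration.

```python
import math


def estimate_grasp(points):
    """Return (center, closing_angle_rad, width) for a parallel-jaw grasp.

    `points` are (x, y) samples of the object, e.g. pixels of a segmentation mask.
    The jaws are centered on the centroid and close across the object's short axis.
    """
    n = len(points)
    if n < 2:
        raise ValueError("need at least two points")
    cx = sum(x for x, _ in points) / n
    cy = sum(y for _, y in points) / n
    # Second moments (covariance) of the point set.
    sxx = sum((x - cx) ** 2 for x, _ in points) / n
    syy = sum((y - cy) ** 2 for _, y in points) / n
    sxy = sum((x - cx) * (y - cy) for x, y in points) / n
    major = 0.5 * math.atan2(2.0 * sxy, sxx - syy)  # orientation of the long axis
    closing = major + math.pi / 2.0                 # jaws close perpendicular to it
    ux, uy = math.cos(closing), math.sin(closing)
    # Object extent along the closing direction gives the jaw opening needed.
    proj = [(x - cx) * ux + (y - cy) * uy for x, y in points]
    return (cx, cy), closing, max(proj) - min(proj)


# A 10 x 2 axis-aligned bar sampled on a grid.
bar = [(x * 0.5, y * 0.5) for x in range(21) for y in range(5)]
center, angle, width = estimate_grasp(bar)
print(center, math.degrees(angle), width)
```

For the bar above, the heuristic grasps at the middle and closes across the 2-unit width rather than the 10-unit length, which is the choice a person would make for a pen or a wrench handle.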

3.2 Multi-Modal Learning for Grasping

Robots can improve their grasping abilities by integrating multiple data sources and learning from them simultaneously. This is known as multi-modal learning.

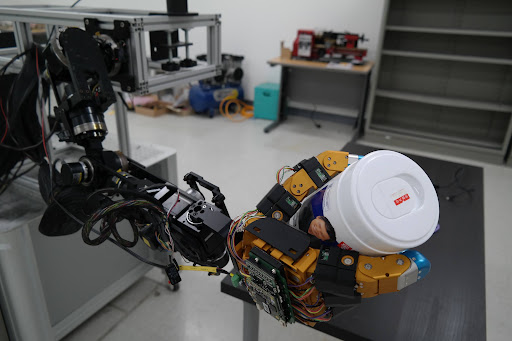

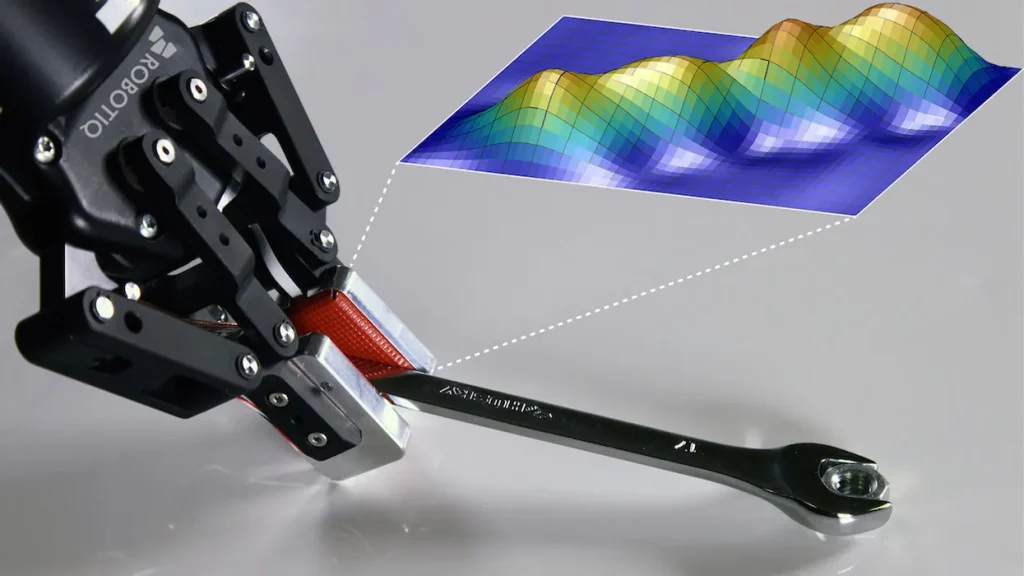

- Vision and Tactile Feedback: By combining visual data with tactile feedback from sensors such as force-sensitive resistors (FSRs) and touch sensors, robots can refine their grasping strategies in real time. This integration allows the robot to detect if it has successfully gripped an object and adjust its forces to avoid dropping it or causing damage.

- Force and Torque Sensing: Advanced robotic hands are equipped with force and torque sensors that help the robot apply the right amount of force when handling an object. If the robot applies too much force, it can crush the object; if it applies too little, the object may slip. Feedback from these sensors allows the robot to adjust its grip and ensure a stable hold.

- Adaptive Grasping: Multi-modal learning enables robots to adapt to changing conditions. For instance, when handling soft or fragile items, robots can adjust their grasp to ensure the object is held gently, preventing deformation.
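
The feedback loop described above (tighten when the object slips, never exceed a safe force) can be sketched as a simple controller. This is a toy under stated assumptions: the slip signal is simulated by comparing grip force with a hidden "required" force, and the gain, relaxation rate, and force limits are made-up values rather than parameters of any real hand or sensor.

```python
def next_grip_force(current, slipping, min_force=1.0, max_force=15.0,
                    gain=1.25, relax=0.02):
    """One control step: tighten on slip, otherwise relax slightly; clamp to limits."""
    target = current * gain if slipping else current * (1.0 - relax)
    return max(min_force, min(max_force, target))


def hold_object(required_force, steps=50, start=1.0, max_force=15.0):
    """Simulate holding an object; returns the grip force after each step."""
    force = start
    history = []
    for _ in range(steps):
        slipping = force < required_force  # stand-in for a real tactile slip sensor
        force = next_grip_force(force, slipping, max_force=max_force)
        history.append(force)
    return history


light = hold_object(required_force=5.0)
print(f"settles near {light[-1]:.2f} N for an object needing 5 N")

too_heavy = hold_object(required_force=100.0)
print(f"saturates at {too_heavy[-1]:.2f} N rather than crushing the object")
```

Relaxing slightly whenever no slip is detected keeps the grip close to the minimum that holds, which matters for soft or fragile items. The clamp at `max_force` is the safeguard against crushing.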

4. Real-World Applications of Optimized Grasping Strategies

4.1 Robotics in Manufacturing and Logistics

The manufacturing and logistics industries have widely adopted robots for their ability to perform repetitive tasks with precision. Optimized grasping strategies are key to enhancing robot performance in these sectors.

- Automated Picking: Warehouse robots, such as those developed by Amazon Robotics, use advanced grasping strategies to pick up items of varying sizes and weights. By iterating on their approaches through machine learning, these robots can pick up objects more efficiently, even in cluttered or dynamic environments.

- Collaborative Robots (Cobots): In modern manufacturing, cobots work alongside human workers to assist with tasks like assembly, packing, and sorting. As cobots learn to optimize their grasping techniques through feedback and reinforcement, they become more effective collaborators, improving both safety and productivity.

4.2 Healthcare and Medical Robotics

In healthcare, robots are increasingly used for tasks that require high precision, such as surgery, rehabilitation, and caregiving. Optimized grasping strategies are critical in these applications to ensure both accuracy and patient safety.

- Surgical Robots: The da Vinci Surgical System and similar platforms are teleoperated: the surgeon controls instruments that grasp and manipulate tissue with fine precision. Learning-based grasping for such systems is an active research area, aimed at assisting surgeons, reducing the risk of errors, and improving outcomes.

- Robotic Prosthetics: Prosthetic limbs equipped with AI and learning algorithms can adapt to the movements and needs of the user. Through iterative training, these systems can learn to improve their grasp on different objects, mimicking natural human movements.

4.3 Domestic Robotics and Assistance

At home, robots can assist with a variety of tasks, including cleaning, organizing, and caregiving. Optimized grasping allows them to perform these tasks effectively, even in unpredictable environments.

- Robotic Vacuum Cleaners: Advanced robotic vacuum cleaners, like the Roomba, use learned strategies to navigate around furniture and to detect and avoid small objects, such as toys or cables, that may impede cleaning. They do not grasp objects themselves, but the same perception skills underpin home robots that do.

- Personal Assistants: Robots designed to assist with household tasks, such as assistive robots for the elderly, must be able to grasp objects of varying shapes and materials. Optimizing grasping strategies is crucial for these robots to carry out tasks like serving meals, picking up objects, or providing support for mobility.

4.4 Agriculture and Food Handling

The agricultural industry is increasingly adopting robots to perform tasks such as harvesting, sorting, and packing. These robots must learn to grasp and manipulate various crops and food products.

- Fruit Picking: Agricultural robots, such as those from FFRobotics, learn to identify ripe fruit and pick it without damaging the crop. By iterating on their grasping strategies, these robots improve their efficiency and reduce waste.

- Food Sorting: Robots in food processing plants use optimized grasping strategies to pick up and sort food products, ensuring they are handled gently while improving overall production efficiency.

5. Challenges and Future Directions

5.1 Environmental Variability and Uncertainty

Despite significant advances, robots still face challenges in dynamic, unpredictable environments. Variations in lighting, object placement, and physical properties require robots to continually adapt their grasping strategies.

5.2 Robotic Dexterity and Sensitivity

While robots are becoming increasingly adept at grasping, the level of dexterity required for certain tasks—such as handling delicate or irregular objects—still presents a challenge. Further developments in robotic hands and sensor technology will be key to overcoming these limitations.

Conclusion

Optimizing object grasping strategies through iterative trials and feedback mechanisms has become a cornerstone of modern robotic manipulation. By leveraging advanced AI, machine learning, and sensor technologies, robots can learn to handle a wide variety of objects with precision and adaptability. As these technologies continue to improve, robots will become more deeply integrated into industries ranging from healthcare to logistics. The main open problems remain environmental variability and dexterity, and progress on both will determine how quickly robots can take on increasingly complex tasks across diverse environments.