Introduction

The continuous evolution of robotics has driven significant advances in domains such as autonomous driving, industrial automation, healthcare, and space exploration. At the heart of these developments lies the ability of robots to perceive and interpret their environment. Traditionally, robotic perception systems have relied on individual sensors such as cameras, LiDAR, or ultrasonic sensors to gather environmental data. Used in isolation, however, each of these sensors has limitations, such as poor performance in low-light conditions, range inaccuracies, and susceptibility to environmental interference.

To overcome these challenges and improve the accuracy and robustness of robotic systems, multi-sensory fusion has emerged as a promising solution. By integrating data from various sensors, robots can achieve a more comprehensive and accurate understanding of their surroundings. This article explores the concept of multi-sensory fusion, its importance in robotic perception, and the technological advancements that are driving this innovation forward.

1. Understanding Multi-sensory Fusion in Robotics

Multi-sensory fusion, also known as sensor fusion, is the process of combining data from multiple sensors to create a more accurate and holistic representation of an environment. In robotics, this concept is applied to enhance perception systems, enabling robots to make more informed decisions and respond to dynamic changes in their surroundings.

The core advantage of sensor fusion lies in the complementary nature of different sensor types. Each sensor has its strengths and weaknesses, and by integrating their data, robots can compensate for individual sensor shortcomings. For example, a camera might struggle in low-light conditions, but an infrared sensor can provide valuable data under those circumstances. By merging the information, the robot can generate a clearer and more reliable understanding of the environment.
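
As a rough illustration of why merging complementary readings helps, the sketch below fuses two distance estimates with inverse-variance weighting, one common way to combine independent measurements. The function name `fuse_measurements` and the sample values and variances are assumptions made up for this example, not figures from a real sensor.

```python
def fuse_measurements(readings):
    """Fuse (value, variance) pairs with inverse-variance weighting.

    Assumes the readings are independent estimates of the same quantity.
    """
    if not readings:
        raise ValueError("need at least one reading")
    # A reading with a smaller variance (more trustworthy) gets a larger weight.
    weights = [1.0 / variance for _, variance in readings]
    total_weight = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, readings)) / total_weight
    # The fused variance is smaller than the variance of any single input.
    fused_variance = 1.0 / total_weight
    return fused_value, fused_variance


# Hypothetical readings in metres: the camera is noisy in low light,
# while the infrared sensor is more certain.
camera = (2.30, 0.25)
infrared = (2.10, 0.05)
fused_value, fused_variance = fuse_measurements([camera, infrared])
print(f"fused distance: {fused_value:.3f} m, variance: {fused_variance:.4f}")
```

The fused estimate sits closer to the more reliable sensor, and its variance is lower than that of either input, which is the practical payoff of combining the two.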

2. Types of Sensors Used in Robotic Perception

Robots are equipped with a wide array of sensors, each designed to capture specific types of information. These sensors can be broadly categorized into several types based on their functionality and the type of data they provide.

- Vision Sensors (Cameras): Cameras, both monocular and stereo, are widely used for visual perception. They provide rich, high-resolution images that allow robots to recognize objects, navigate, and interact with their surroundings. However, they are sensitive to lighting conditions, and a monocular camera cannot measure depth directly.

- LiDAR (Light Detection and Ranging): LiDAR sensors measure distances by bouncing laser beams off objects and calculating the time it takes for the light to return. LiDAR is highly effective for creating detailed 3D maps of environments and is less susceptible to lighting changes than cameras are. However, it can be expensive and may struggle with transparent or reflective surfaces.

- Ultrasonic Sensors: Ultrasonic sensors are commonly used for proximity sensing. They emit sound waves and measure the time taken for the sound to reflect back from objects. While they are inexpensive and robust, they provide lower resolution compared to LiDAR or cameras and are generally limited to short-range measurements.

- Radar (Radio Detection and Ranging): Radar sensors use radio waves to detect objects and measure their speed and distance. They are highly effective in adverse weather conditions, such as fog, rain, or snow, where other sensors might fail.

- Infrared Sensors: Thermal infrared sensors detect heat signatures and are often used for night vision or to identify living beings. Although their resolution is typically lower than that of visible-light cameras, they are highly useful in specific applications, such as search-and-rescue missions.

- IMUs (Inertial Measurement Units): IMUs measure the robot’s motion, namely its linear acceleration and angular velocity. They are critical for maintaining balance and stability in mobile robots, particularly in dynamic environments.
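
LiDAR and ultrasonic sensors, as described above, both turn a round-trip time into a distance. A minimal sketch of that calculation follows; the helper name `time_of_flight_distance`, the sample timings, and the speed of sound (roughly 343 m/s in air at about 20 °C) are assumptions chosen for illustration.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0
SPEED_OF_SOUND_M_S = 343.0  # assumed: dry air at roughly 20 degrees Celsius


def time_of_flight_distance(round_trip_s, wave_speed_m_s):
    """Return the one-way distance for a pulse that travels out and back."""
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse covers the distance twice, so halve the total path length.
    return wave_speed_m_s * round_trip_s / 2.0


# Hypothetical round-trip times: nanoseconds for light, milliseconds for sound.
lidar_range_m = time_of_flight_distance(66.7e-9, SPEED_OF_LIGHT_M_S)
ultrasonic_range_m = time_of_flight_distance(5.83e-3, SPEED_OF_SOUND_M_S)
print(f"LiDAR: {lidar_range_m:.2f} m, ultrasonic: {ultrasonic_range_m:.2f} m")
```

The difference in time scales hints at why LiDAR needs fast timing electronics, while ultrasonic sensors can be built cheaply.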

3. The Role of Multi-sensory Fusion in Enhancing Perception

In complex environments, relying on a single sensor can lead to incomplete or inaccurate data. Multi-sensory fusion combines the strengths of different sensors to create a more accurate and robust perception system. The fusion process can be broken down into three main stages:

- Data Acquisition: Multiple sensors simultaneously collect data from the environment. The information gathered may vary in terms of format, quality, and accuracy. For instance, a camera might provide detailed visual data, while a LiDAR sensor offers precise distance measurements.

- Data Alignment: The data from different sensors must be brought into a common reference frame, both spatially and temporally, before they can be fused correctly. For example, if a camera and a LiDAR sensor are mounted on a robot, the relative position and orientation of the two sensors must be known, and their measurements must be matched by timestamp, so that a LiDAR point and an image pixel captured at the same moment refer to the same part of the scene.

- Data Fusion: Once the data is aligned, it is processed to generate a unified perception model. This model integrates the different sensory inputs, extracting key features and resolving ambiguities. Techniques such as Kalman filters, particle filters, and deep learning models are commonly used for this purpose.
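
The alignment stage above can be sketched in two small steps: interpolating a reading to another sensor's timestamp (temporal alignment) and transforming a point from one sensor frame into another (spatial alignment). The function names, the yaw-only rotation, and all numeric values below are simplifying assumptions for illustration; a real system would use a full 3D rotation obtained from calibration.

```python
import math


def interpolate_at(t_query, t0, v0, t1, v1):
    """Linearly interpolate a scalar reading to time t_query (temporal alignment)."""
    if t0 >= t1 or t_query < t0 or t_query > t1:
        raise ValueError("require t0 < t1 and t0 <= t_query <= t1")
    alpha = (t_query - t0) / (t1 - t0)
    return v0 + alpha * (v1 - v0)


def lidar_to_camera(point, yaw_rad, translation):
    """Rotate a LiDAR point about the z axis, then translate it (spatial alignment)."""
    x, y, z = point
    tx, ty, tz = translation
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    return (c * x - s * y + tx, s * x + c * y + ty, z + tz)


# Hypothetical range readings at t = 0.00 s and t = 0.10 s, queried at the
# camera frame time t = 0.025 s.
range_at_frame = interpolate_at(0.025, 0.00, 4.0, 0.10, 4.2)

# Hypothetical extrinsics: LiDAR rotated 90 degrees about z and mounted
# 0.2 m below the camera origin.
point_in_camera = lidar_to_camera((1.0, 0.0, 0.0), math.pi / 2, (0.0, 0.0, 0.2))
print(range_at_frame, point_in_camera)
```

Only after both steps do a range reading and an image pixel describe the same place at the same time, which is what the fusion stage relies on.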

4. Techniques for Multi-sensory Fusion

Several algorithms and methods have been developed to effectively fuse multi-sensory data in robotics. These techniques vary in complexity and application, depending on the nature of the sensors and the desired outcomes.

- Kalman Filter: The Kalman filter is one of the most widely used techniques for sensor fusion in robotics. It predicts the robot’s state from the previous estimate using a motion model, then corrects that prediction with new sensor data, weighting the prediction and the measurement according to their uncertainties. The Kalman filter is particularly effective for integrating noisy measurements from sensors such as IMUs or GPS. The standard filter assumes linear dynamics and Gaussian noise; the extended and unscented variants handle nonlinear systems.

- Particle Filter: The particle filter, also known as a sequential Monte Carlo method, is another technique used for sensor fusion in robotics; applied to robot localization, it is called Monte Carlo localization. It represents the robot’s state as a set of particles, each one a possible configuration of the robot. The filter uses sensor measurements to update the weight (likelihood) of each particle and resamples accordingly, allowing the robot to estimate its position even when the uncertainty is non-Gaussian or multimodal.

- Deep Learning for Sensor Fusion: In recent years, deep learning techniques have been applied to sensor fusion, particularly for tasks such as object detection, semantic segmentation, and autonomous navigation. Convolutional neural networks (CNNs) and recurrent neural networks (RNNs) can be trained to combine and process data from multiple sensors, enabling robots to learn to perceive their environments with high accuracy.

- Simultaneous Localization and Mapping (SLAM): SLAM algorithms are fundamental for autonomous robots navigating unknown environments. By combining data from various sensors, SLAM enables robots to build maps of their surroundings and localize themselves within those maps. Modern SLAM systems often rely on a combination of LiDAR, cameras, and IMUs for enhanced robustness.
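
To make the predict-and-update cycle of the Kalman filter concrete, here is a minimal one-dimensional sketch. It assumes a robot moving along a line, with an odometry step `u` as the motion input and a position measurement `z`; the noise values `q` and `r` and the sample data are invented for the example, and real systems typically use the matrix form of the filter.

```python
def kalman_predict(x, p, u, q):
    """Predict step: move the estimate by u and grow its variance by process noise q."""
    return x + u, p + q


def kalman_update(x, p, z, r):
    """Update step: blend the prediction with measurement z of variance r."""
    gain = p / (p + r)          # close to 1 when the prediction is uncertain
    x_new = x + gain * (z - x)  # correct by a fraction of the innovation
    p_new = (1.0 - gain) * p    # uncertainty shrinks after each measurement
    return x_new, p_new


# Start with a vague position estimate, then process three hypothetical
# (odometry step, position measurement) pairs.
x, p = 0.0, 1.0
for u, z in [(1.0, 1.1), (1.0, 2.0), (1.0, 2.9)]:
    x, p = kalman_predict(x, p, u, q=0.01)
    x, p = kalman_update(x, p, z, r=0.1)

print(f"position estimate: {x:.3f}, variance: {p:.4f}")
```

The gain does the fusing: it decides, at every step, how much to trust the motion model versus the new measurement, based only on their variances.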

5. Challenges in Multi-sensory Fusion

While sensor fusion offers substantial improvements in robotic perception, it also introduces several challenges:

- Sensor Calibration: Accurate sensor calibration is crucial for successful fusion. Misalignment or inaccuracies in sensor calibration can lead to poor fusion results, affecting the robot’s ability to interpret its environment correctly.

- Real-time Processing: The fusion process requires significant computational resources, especially when dealing with high-dimensional data from multiple sensors. For robots to function autonomously in real time, fusion algorithms must be optimized for speed and efficiency.

- Sensor Reliability: Each sensor type has its own limitations. For example, cameras may struggle in low-light conditions, while LiDAR might face issues with reflective surfaces. Ensuring that the fusion system can handle sensor failures or low-quality data is a significant challenge.

- Dynamic Environments: Robots often operate in dynamic environments where objects and conditions change rapidly. Maintaining an accurate and up-to-date fusion model in such environments is a complex task, as sensor data can quickly become outdated or inconsistent.
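
One simple way to cope with unreliable or outdated sensor data is to screen readings before fusing them. The sketch below discards readings that are non-finite or older than a freshness limit, then fuses the rest with inverse-variance weights. The `Reading` class, the `max_age_s` threshold, and the sample values are all assumptions for illustration, not part of any particular robotics framework.

```python
import math
from dataclasses import dataclass


@dataclass
class Reading:
    sensor: str
    value: float      # measured distance in metres
    variance: float   # measurement noise variance
    timestamp: float  # seconds


def is_usable(reading, now, max_age_s):
    """Reject readings that are invalid (NaN/inf) or too old to trust."""
    age = now - reading.timestamp
    return (
        math.isfinite(reading.value)
        and math.isfinite(reading.variance)
        and reading.variance > 0
        and 0 <= age <= max_age_s
    )


def robust_fuse(readings, now, max_age_s=0.2):
    """Fuse only the usable readings; return None if every sensor failed."""
    good = [r for r in readings if is_usable(r, now, max_age_s)]
    if not good:
        return None
    weights = [1.0 / r.variance for r in good]
    value = sum(w * r.value for w, r in zip(weights, good)) / sum(weights)
    return value, [r.sensor for r in good]


readings = [
    Reading("camera", float("nan"), 0.25, 9.95),  # failed in low light
    Reading("lidar", 5.0, 0.01, 9.95),
    Reading("radar", 5.2, 0.04, 9.90),
    Reading("ultrasonic", 4.0, 0.10, 9.00),       # stale by one second
]
result = robust_fuse(readings, now=10.0)
print(result)
```

Returning `None` when nothing is usable forces the caller to handle a total perception failure explicitly instead of acting on a silently wrong estimate.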

6. Applications of Multi-sensory Fusion in Robotics

Multi-sensory fusion is now applied across many fields of robotics, enabling more capable, adaptive, and intelligent systems.

- Autonomous Vehicles: In autonomous driving, sensor fusion combines data from cameras, LiDAR, radar, and IMUs to create a comprehensive understanding of the vehicle’s environment. This fusion enables the vehicle to detect obstacles, navigate safely, and make real-time decisions based on the surrounding traffic conditions.

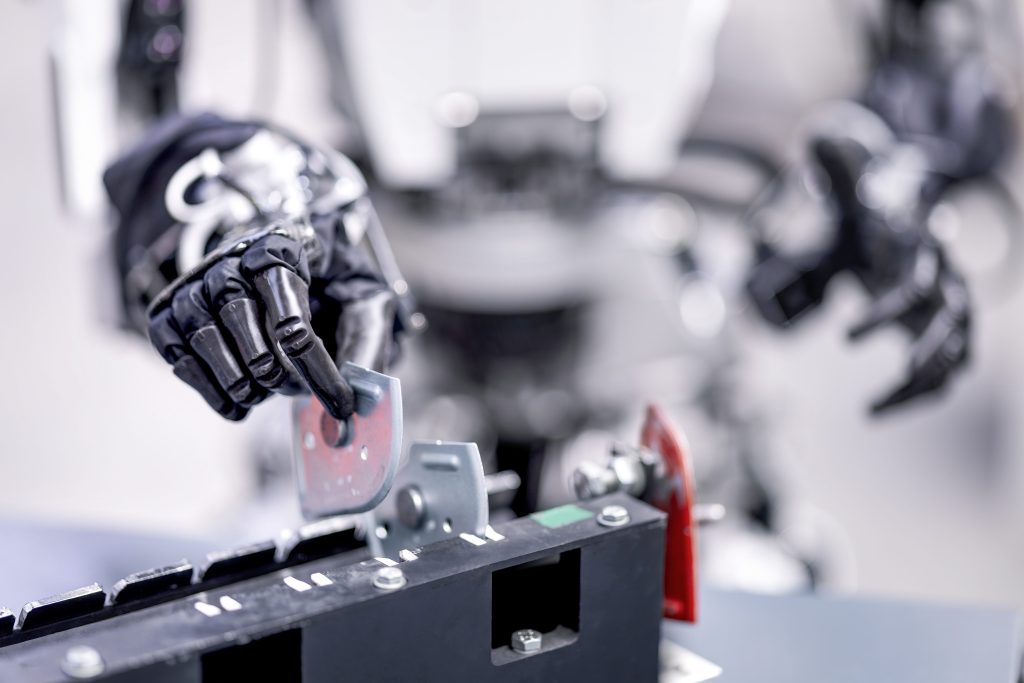

- Robotic Manipulation: In industrial robots, combining vision sensors with tactile feedback or force sensors allows robots to interact with objects in a more precise and adaptable way. This fusion is essential for tasks such as assembly, packaging, and sorting, where the robot must manipulate objects with varying shapes and weights.

- Search and Rescue: In search-and-rescue missions, robots can use a combination of infrared sensors, cameras, and LiDAR to navigate hazardous environments and locate survivors. Multi-sensory fusion helps ensure that the robot can operate effectively in dark or cluttered environments, even when some sensors are obscured or impaired.

- Healthcare Robotics: In medical robots, multi-sensory fusion is used to enhance surgical precision and enable real-time monitoring of patients. For example, combining imaging data with force feedback helps surgical robots, and the surgeons operating them, perform minimally invasive procedures with high accuracy.

Conclusion

The integration of multi-sensory fusion is a key enabler for achieving more accurate, reliable, and adaptive robotic perception. As robots continue to be deployed in complex and dynamic environments, the ability to process and fuse data from a variety of sensors will become increasingly important. While challenges remain, such as sensor calibration, real-time processing, and handling dynamic environments, ongoing research and technological advancements are paving the way for more sophisticated robotic systems that can operate autonomously and interact intelligently with the world.

By enhancing sensory accuracy through fusion, robots can better understand and navigate their surroundings, leading to improved performance across a wide range of applications, from autonomous vehicles to medical robots. The future of robotics lies in the seamless integration of diverse sensory modalities, providing robots with a richer and more nuanced understanding of the world around them.