1. Introduction

Computer vision has revolutionized the way robots perceive and interact with their surroundings. Traditionally, robots were designed for static environments: object manipulation, assembly, and inspection were carried out under controlled, predictable conditions. In the real world, however, environments are rarely static, and many tasks require robots to interact with dynamic objects, that is, objects that move, change shape, or appear unexpectedly.

The ability to track moving objects is critical for robots in a variety of real-world applications. Autonomous vehicles must track pedestrians, other vehicles, and obstacles. In industrial automation, robots must adapt to moving items on conveyor belts. In healthcare, robotic systems are needed to track and follow a surgeon’s hand during minimally invasive procedures. This article covers the advancements, key techniques, and challenges associated with dynamic object tracking in robotics, which is becoming an indispensable tool for building intelligent, flexible, and adaptable systems.

2. Core Concepts in Dynamic Object Tracking

Dynamic object tracking involves continuously identifying and following objects as they move through an environment. The process of tracking moving objects in robotic systems typically consists of several stages, which we will outline here:

2.1 Computer Vision in Robotics

Computer vision is the field of artificial intelligence (AI) that deals with how computers can be made to gain understanding from digital images or videos. In the context of robotics, vision systems use cameras and other imaging sensors to capture visual data, which is then processed to perform tasks such as object detection, recognition, and tracking.

There are several core components involved in the visual processing pipeline:

- Image Acquisition: The robot uses various sensors, such as RGB cameras, depth cameras, or even thermal cameras, to capture the environment.

- Pre-processing: This step involves cleaning the images and preparing them for analysis. Techniques like edge detection, background subtraction, and noise removal are typically used.

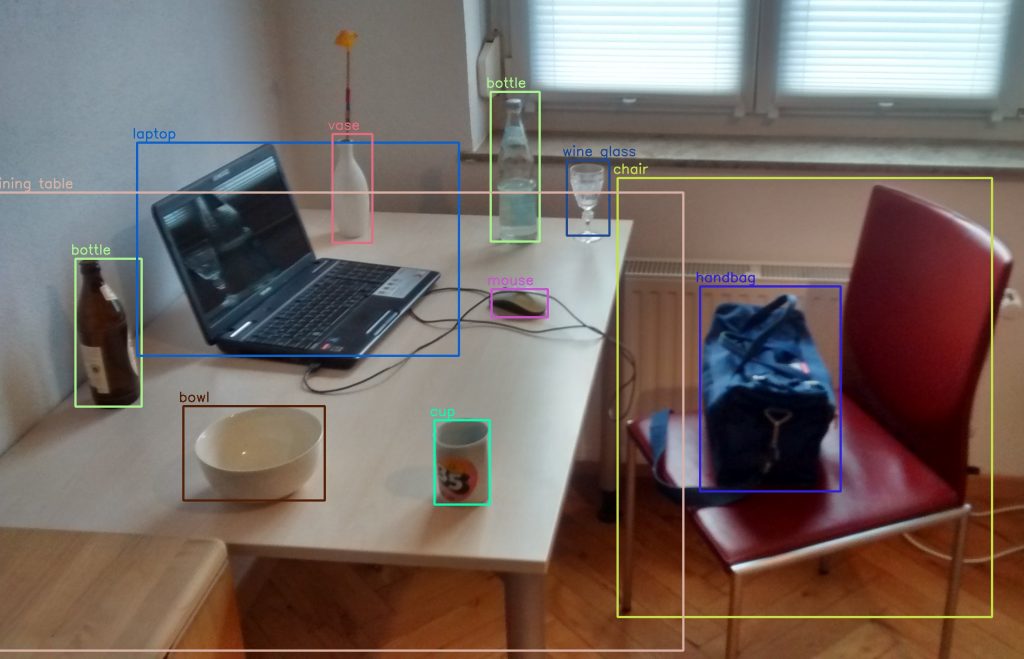

- Object Detection and Recognition: The robot identifies and classifies objects of interest.

- Tracking: Once an object is detected, the robot must follow its movements in real time, updating its position and predicting future motion.
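The pre-processing and detection stages listed above can be illustrated with simple frame differencing, one of the most basic forms of background subtraction. The sketch below is a toy example on grayscale frames stored as nested lists; the function names and the threshold value are assumptions made for illustration, and a real system would typically use an image library and a more robust background model.

```python
def moving_pixels(prev_frame, curr_frame, threshold=25):
    """Return a binary mask of pixels whose intensity changed by more than threshold."""
    return [
        [1 if abs(curr - prev) > threshold else 0 for prev, curr in zip(prev_row, curr_row)]
        for prev_row, curr_row in zip(prev_frame, curr_frame)
    ]


def bounding_box(mask):
    """Return (x_min, y_min, x_max, y_max) around all set pixels, or None if none are set."""
    coords = [(x, y) for y, row in enumerate(mask) for x, value in enumerate(row) if value]
    if not coords:
        return None
    xs = [x for x, _ in coords]
    ys = [y for _, y in coords]
    return (min(xs), min(ys), max(xs), max(ys))


# Toy example: an empty 4x4 scene, then a bright object appears.
previous = [[0] * 4 for _ in range(4)]
current = [row[:] for row in previous]
current[1][1] = current[1][2] = current[2][2] = 200

detection = bounding_box(moving_pixels(previous, current))
```

Note that plain differencing marks both where an object is now and where it was in the previous frame, so the resulting box is only a rough detection to hand to the tracking stage.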

2.2 Object Tracking Algorithms

Object tracking is the task of estimating the motion of a specific object over time. The robot must handle occlusions (when an object is temporarily blocked), scale variations (if the object appears closer or further), and changes in lighting conditions. The most commonly used algorithms for tracking dynamic objects include:

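- Kalman Filters: A recursive statistical estimator that predicts an object’s position from its past trajectory and then corrects that prediction with each new measurement, weighting the two by their uncertainties. The standard form assumes linear motion and Gaussian noise.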

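- Particle Filters: These represent the object’s possible states with a set of weighted random samples (particles). This makes them more flexible than Kalman Filters, since they can handle nonlinear motion and non-Gaussian noise, at a higher computational cost.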

- Optical Flow: This technique tracks the movement of objects based on the changes in pixel intensity across frames of video.

- Deep Learning-Based Approaches: Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs) are often employed for feature extraction and motion prediction in more complex tracking scenarios.
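To make the predict-and-correct cycle of a Kalman Filter concrete, here is a minimal sketch for a single coordinate under a constant-velocity motion model. The class name, the default noise values, and the simplified diagonal process noise are all assumptions chosen for illustration; a production tracker would normally track 2D or 3D state using a matrix library.

```python
class ConstantVelocityKalman1D:
    """Kalman filter with state [position, velocity] and position-only measurements."""

    def __init__(self, pos=0.0, vel=0.0, init_var=100.0, process_var=1e-3, meas_var=1.0):
        self.pos, self.vel = pos, vel
        # 2x2 state covariance, stored element by element.
        self.p00, self.p01, self.p10, self.p11 = init_var, 0.0, 0.0, init_var
        self.q, self.r = process_var, meas_var

    def predict(self, dt=1.0):
        """Propagate state and covariance: x = F x, P = F P F^T + Q, with F = [[1, dt], [0, 1]]."""
        self.pos += dt * self.vel
        p00 = self.p00 + dt * (self.p01 + self.p10) + dt * dt * self.p11 + self.q
        p01 = self.p01 + dt * self.p11
        p10 = self.p10 + dt * self.p11
        p11 = self.p11 + self.q
        self.p00, self.p01, self.p10, self.p11 = p00, p01, p10, p11

    def update(self, measured_pos):
        """Correct the prediction with a position measurement (H = [1, 0])."""
        innovation = measured_pos - self.pos
        s = self.p00 + self.r                    # innovation variance
        k0, k1 = self.p00 / s, self.p10 / s      # Kalman gain
        self.pos += k0 * innovation
        self.vel += k1 * innovation
        # P = (I - K H) P
        p00 = (1.0 - k0) * self.p00
        p01 = (1.0 - k0) * self.p01
        p10 = self.p10 - k1 * self.p00
        p11 = self.p11 - k1 * self.p01
        self.p00, self.p01, self.p10, self.p11 = p00, p01, p10, p11


# Track an object that moves 2 units per frame.
kf = ConstantVelocityKalman1D()
for frame in range(1, 31):
    kf.predict()
    kf.update(2.0 * frame)
```

Calling `predict()` without a following `update()` lets the filter coast on its velocity estimate, which is one common way to bridge a frame in which the object was not detected.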

2.3 Sensor Fusion

To enhance the robustness and accuracy of tracking, many robotic systems integrate multiple sensors (sensor fusion). For example, combining vision-based sensors (e.g., cameras) with Lidar or IMU (Inertial Measurement Units) can help the robot gain a more comprehensive understanding of its environment, especially in complex or cluttered settings.
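One simple way to see why fusion helps is inverse-variance weighting of two independent estimates of the same quantity, for example an object's range from a camera and from Lidar. The sketch below assumes independent, roughly Gaussian errors with known variances; the function name and the numbers are illustrative only.

```python
def fuse(estimate_a, var_a, estimate_b, var_b):
    """Combine two independent estimates, trusting the lower-variance one more."""
    weight_a = 1.0 / var_a
    weight_b = 1.0 / var_b
    fused = (weight_a * estimate_a + weight_b * estimate_b) / (weight_a + weight_b)
    fused_var = 1.0 / (weight_a + weight_b)
    return fused, fused_var


# Hypothetical readings: camera says 10.0 m (variance 4.0), Lidar says 12.0 m (variance 1.0).
distance, variance = fuse(10.0, 4.0, 12.0, 1.0)
```

The fused variance is smaller than either input variance, which is the formal sense in which combining sensors improves accuracy under these assumptions.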

3. Techniques for Dynamic Object Tracking

Dynamic object tracking requires a blend of real-time processing, predictive algorithms, and data fusion. Some of the most promising techniques in this field are:

3.1 Feature-Based Tracking

Feature-based methods involve tracking specific points or features that can be easily identified in each frame. Some of the most popular feature detectors include:

- SIFT (Scale-Invariant Feature Transform): Detects distinctive keypoints that are invariant to scale and rotation, and partially robust to affine distortion and illumination changes.

- SURF (Speeded-Up Robust Features): A faster alternative inspired by SIFT that relies on integral images and box-filter approximations, making it better suited to time-constrained applications.

- ORB (Oriented FAST and Rotated BRIEF): A fast and efficient feature extraction algorithm that is suitable for real-time tracking.

These features help the robot track objects based on keypoints that remain recognizable under moderate changes in viewpoint and lighting.
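Once keypoints are described in two frames, tracking reduces to matching descriptors between them. Binary descriptors such as those produced by ORB are usually compared with the Hamming distance. The following sketch assumes the descriptors have already been extracted and uses made-up 8-bit integers in their place; the function names and the distance threshold are illustrative assumptions.

```python
def hamming(a, b):
    """Number of differing bits between two binary descriptors stored as ints."""
    return bin(a ^ b).count("1")


def match_descriptors(prev_desc, curr_desc, max_distance=2):
    """For each previous descriptor, find the nearest current one within max_distance."""
    matches = []
    for i, d_prev in enumerate(prev_desc):
        best_j, best_dist = None, max_distance + 1
        for j, d_curr in enumerate(curr_desc):
            dist = hamming(d_prev, d_curr)
            if dist < best_dist:
                best_j, best_dist = j, dist
        if best_j is not None:
            matches.append((i, best_j, best_dist))
    return matches


# Toy 8-bit descriptors (real ORB descriptors are 256 bits).
previous = [0b10110010, 0b01001101, 0b11100001]
current = [0b01001111, 0b10110010, 0b00001111]
matches = match_descriptors(previous, current)
```

Each match is a tuple of (index in previous frame, index in current frame, distance). The third descriptor has no sufficiently close counterpart, so it is left unmatched, as would happen for a keypoint that left the view.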

3.2 Deep Learning for Tracking

Convolutional Neural Networks (CNNs) have become indispensable for object tracking. Deep learning models can directly learn and generalize complex features that traditional algorithms might struggle to detect.

- Siamese Networks: Used for visual object tracking, where two identical branches that share the same weights embed a template patch and a candidate patch, and the embeddings are compared to determine whether they belong to the same object.

- Recurrent Neural Networks (RNNs): For temporal consistency, RNNs can model the temporal sequence of images, ensuring that the robot follows objects smoothly even in challenging conditions.
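The comparison step of a Siamese tracker can be sketched without any learned model: the same embedding function is applied to the template and to each candidate patch, and the candidate with the most similar embedding wins. In the example below, `embed` is a hypothetical hand-written stand-in (flatten and mean-center) for a trained CNN branch, so this illustrates the structure only, not the accuracy of a real network.

```python
import math


def embed(patch):
    """Stand-in for a shared CNN branch: flatten the patch and subtract its mean."""
    flat = [value for row in patch for value in row]
    mean = sum(flat) / len(flat)
    return [value - mean for value in flat]


def cosine_similarity(u, v):
    """Cosine of the angle between two vectors, or 0.0 if either has zero length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def best_match(template, candidates):
    """Return (index, score) of the candidate whose embedding is closest to the template."""
    target = embed(template)
    scores = [cosine_similarity(target, embed(candidate)) for candidate in candidates]
    best = max(range(len(scores)), key=scores.__getitem__)
    return best, scores[best]


template = [[10, 200], [200, 10]]
candidates = [
    [[200, 10], [10, 200]],      # inverted pattern
    [[20, 210], [210, 20]],      # same pattern, slightly brighter
    [[100, 100], [100, 100]],    # featureless patch
]
index, score = best_match(template, candidates)
```

Because both inputs pass through the same function, a uniform brightness shift affects them identically and the brighter copy of the pattern is still selected.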

3.3 3D Object Tracking

Tracking objects in three-dimensional space adds a layer of complexity but provides significant benefits, especially for autonomous robots. By using depth sensors or stereo vision, robots can:

- Track the 3D trajectory of objects.

- Estimate the velocity and directional movement of the object.

- Estimate object orientation in 3D space, enabling more complex tasks like grasping or collision avoidance.
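As a rough illustration of the first two points, a pixel with a known depth can be back-projected into 3D using the pinhole camera model, and velocity can then be estimated by finite differences between frames. The intrinsics (`fx`, `fy`, `cx`, `cy`), pixel coordinates, and frame interval below are made-up values, and the sketch ignores lens distortion and measurement noise.

```python
import math


def backproject(u, v, depth, fx, fy, cx, cy):
    """Convert pixel (u, v) with depth in meters to a 3D point in the camera frame."""
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)


def velocity(p_prev, p_curr, dt):
    """Finite-difference velocity between two 3D points observed dt seconds apart."""
    return tuple((curr - prev) / dt for prev, curr in zip(p_prev, p_curr))


def speed(vel):
    """Magnitude of a velocity vector."""
    return math.sqrt(sum(component * component for component in vel))


# Hypothetical intrinsics for a 640x480 depth camera.
FX, FY, CX, CY = 500.0, 500.0, 320.0, 240.0

p0 = backproject(320, 240, 2.0, FX, FY, CX, CY)   # object at the image center
p1 = backproject(420, 240, 2.0, FX, FY, CX, CY)   # 100 pixels to the right, 0.1 s later
v = velocity(p0, p1, dt=0.1)
```

In practice the raw finite-difference velocity is noisy, so it is usually smoothed, for instance by feeding the 3D positions into a filter such as the Kalman Filter described earlier.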

4. Applications of Dynamic Object Tracking in Robotics

Dynamic object tracking enables a wide range of applications, which can be grouped into several key domains:

4.1 Autonomous Vehicles

Autonomous driving heavily relies on dynamic object tracking to ensure the safe and efficient navigation of vehicles. Some key tasks in this area include:

- Pedestrian Detection: Tracking moving pedestrians is crucial to avoid accidents.

- Vehicle Tracking: Understanding the motion of other vehicles on the road allows the autonomous car to make decisions in real time, such as braking, lane changing, or adjusting speed.

- Obstacle Avoidance: Tracking static and dynamic obstacles like road barriers or animals.

4.2 Industrial Robotics

In manufacturing settings, robots are increasingly used to perform tasks like assembly and quality inspection. Dynamic object tracking plays a vital role in:

- Parts Handling: Robots must track moving parts along conveyor belts and pick them up at the correct moment.

- Robot-Object Interaction: During assembly tasks, robots must follow moving components, adjust their trajectory, and interact dynamically with them.
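For the conveyor case, picking "at the correct moment" comes down to simple timing arithmetic once the tracker reports a part's position and the belt speed is known. The sketch below assumes a constant belt speed and a fixed robot latency; the function name, parameter names, and numbers are hypothetical.

```python
def time_until_pick(part_position_m, pick_position_m, belt_speed_mps, robot_latency_s=0.0):
    """Seconds to wait before triggering the pick, or None if the part cannot be reached."""
    if belt_speed_mps <= 0:
        raise ValueError("belt speed must be positive")
    distance = pick_position_m - part_position_m
    if distance < 0:
        return None  # the part has already passed the pick position
    wait = distance / belt_speed_mps - robot_latency_s
    return wait if wait >= 0 else None  # None: the robot cannot react in time


# Part tracked at 0.2 m, pick station at 1.0 m, belt at 0.4 m/s, robot needs 0.5 s to move.
wait_s = time_until_pick(0.2, 1.0, 0.4, robot_latency_s=0.5)
```

Re-running this calculation on every tracking update keeps the trigger time consistent with the most recent position estimate.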

4.3 Healthcare Robotics

In the healthcare industry, robots are used for delicate operations, physical therapy, and elder care. Dynamic object tracking can assist in:

- Surgical Robotics: Tracking surgical instruments to ensure precise positioning.

- Therapeutic Robotics: In rehabilitation, robots must track the position of limbs and provide adaptive feedback during therapy sessions.

4.4 Logistics and Delivery Robots

Delivery robots, such as those operated by Starship Technologies or Nuro, rely on dynamic object tracking to:

- Navigate sidewalks while avoiding pedestrians.

- Track moving packages during last-mile delivery.

- Optimize route planning by identifying and avoiding dynamic obstacles.

5. Challenges in Dynamic Object Tracking

While dynamic object tracking offers powerful capabilities, several challenges need to be addressed:

5.1 Real-Time Processing

Achieving real-time performance is one of the biggest challenges in dynamic object tracking. Processing many frames per second, particularly with deep learning techniques, demands substantial processing power and careful optimization.

5.2 Occlusions and Loss of Tracking

Occlusions occur when an object is temporarily blocked by another object or a part of the environment. This can result in the robot losing track of the object and requires advanced algorithms to recover the object once it reappears.
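A common, simple recovery strategy is to let a track "coast" on its last known velocity while detections are missing and to drop it only after too many consecutive misses. The sketch below shows this for one coordinate; the class name and the `max_missed` parameter are assumptions for illustration, and more advanced approaches add appearance-based re-identification on top.

```python
class CoastingTrack:
    """Track that predicts through short occlusions and expires after long ones."""

    def __init__(self, position, velocity, max_missed=5):
        self.position = position
        self.velocity = velocity
        self.max_missed = max_missed
        self.missed = 0

    def step(self, detection, dt=1.0):
        """Advance one frame; detection is a position, or None while occluded.

        Returns True while the track should be kept alive.
        """
        if detection is None:
            # No measurement: extrapolate along the last known velocity.
            self.position += self.velocity * dt
            self.missed += 1
        else:
            # Measurement available: refresh velocity and position.
            self.velocity = (detection - self.position) / dt
            self.position = detection
            self.missed = 0
        return self.missed <= self.max_missed


track = CoastingTrack(position=0.0, velocity=1.0, max_missed=2)
alive = [track.step(d) for d in (1.0, None, None, 4.1)]
```

After two occluded frames the track has coasted to position 3.0, so the reappearing detection at 4.1 is close to where the track expects the object and can be associated with it again.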

5.3 Changing Environments

In unpredictable and dynamic environments, lighting changes, motion blur, and background clutter can interfere with the robot’s ability to detect and track objects consistently.

5.4 Data Annotation and Training

For deep learning-based approaches, the need for large annotated datasets is a challenge. Collecting labeled data for dynamic object tracking across various environments is time-consuming and costly.

6. Future Directions

The field of dynamic object tracking in robotics is rapidly evolving, and several promising avenues for research and improvement exist:

6.1 Advanced Sensor Integration

As robots become more advanced, the integration of multiple sensors (such as cameras, depth sensors, Lidar, and IMUs) can improve object tracking, especially in complex or poorly lit environments.

6.2 Multi-Object Tracking

As robots are required to track multiple objects simultaneously, new algorithms and architectures will be needed to handle the complexities of interacting with more than one dynamic object in real time.
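The core difficulty in multi-object tracking is data association: deciding which new detection belongs to which existing track. A minimal sketch using greedy matching on bounding-box overlap (intersection over union, IoU) is shown below. The function names and the `min_iou` threshold are illustrative assumptions; many trackers replace the greedy step with an optimal assignment method such as the Hungarian algorithm.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = a
    bx1, by1, bx2, by2 = b
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def associate(tracks, detections, min_iou=0.3):
    """Greedily pair tracks with detections, highest overlap first."""
    pairs = sorted(
        ((iou(t, d), ti, di) for ti, t in enumerate(tracks) for di, d in enumerate(detections)),
        reverse=True,
    )
    used_tracks, used_dets, matches = set(), set(), []
    for score, ti, di in pairs:
        if score < min_iou:
            break  # remaining pairs overlap too little to be the same object
        if ti in used_tracks or di in used_dets:
            continue
        used_tracks.add(ti)
        used_dets.add(di)
        matches.append((ti, di))
    unmatched_tracks = [i for i in range(len(tracks)) if i not in used_tracks]
    unmatched_dets = [i for i in range(len(detections)) if i not in used_dets]
    return sorted(matches), unmatched_tracks, unmatched_dets


tracks = [(0, 0, 10, 10), (20, 20, 30, 30)]
detections = [(21, 21, 31, 31), (1, 0, 11, 10), (50, 50, 60, 60)]
matches, lost_tracks, new_objects = associate(tracks, detections)
```

Unmatched detections typically start new tracks, while unmatched tracks are candidates for coasting or removal.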

6.3 Autonomous Learning and Adaptation

Robots may be able to improve their tracking algorithms autonomously through reinforcement learning or unsupervised learning, making them more adaptable to changing environments and situations.

7. Conclusion

Dynamic object tracking is a critical capability for modern robotics, enabling machines to perceive and interact with their environment in real time. The ability to track moving objects opens new possibilities across various industries, including autonomous driving, manufacturing, healthcare, and logistics. As technology continues to advance, the integration of machine learning techniques, sensor fusion, and real-time data processing will make robots even more capable and versatile. Continued progress in this field holds promise for a future where robots not only see the world around them but also engage with and respond to it with increasing accuracy and adaptability.